The idea that schooling raises intelligence still prevails. Ceci's (1991) influential review concluded that schooling has a strong impact on IQ scores, despite his closing warning that observed scores do not equate to real intelligence. Since then, many more studies have been published, including ones using latent factor modeling and quasi-experimental designs. It remains unclear whether education truly improves general intelligence modeled as a latent factor, or whether long-lasting IQ gains involve far transfer. Most likely, the answer to both questions is negative.

Latent factor modeling of g

Ritchie et al. (2015) analyzed longitudinal IQ changes in the LBC1936 data using structural equation modeling (SEM). They compared three pathway models involving the latent g factor, all controlling for prior IQ measured at age 11. In the first model, education affects subtest scores only through g. In the second, education affects subtest scores both through g and independently of it. In the third, education affects subtest scores only independently of g. This last model fit the data best. Later, Lasker & Kirkegaard (2022) applied the same method to the longitudinal VES data and reached the same conclusion, although they modeled educational status and IQ changes strictly among adults rather than from childhood to adulthood. A major weakness of both studies is that they involved older adults. At this stage of life, intelligence can be affected by many external influences, such as age-related cognitive decline, that may obscure educational effects.

On the other hand, Protzko (2016) rejected the hollow-gains interpretation based on his own modeling of the latent g factor. One glaring difference is that Ritchie directly tested Spearman’s hypothesis against a competing hypothesis, whereas Protzko did not.

Protzko (2016) analyzed the structure of IQ gains in the Infant Health and Development Program (IHDP), an intervention for low-birth-weight children, from ages 3 to 8. Multi-group confirmatory factor analysis (MGCFA) with a two-factor model was applied to the experimental and control groups, and measurement invariance held across groups. At age 3 the experimental group had a large advantage in the latent factor score, but by age 8 there was no difference in latent g between groups. Protzko wrongly assumed that the “IQ-raising g” hypothesis cannot account for this pattern of an initial latent g gain followed by a complete fade-out. The result is in fact fully consistent with the correlational analyses of g-loadings and IQ gains by Jensen (1998) and te Nijenhuis (2007, 2014, 2015). A negative correlation only means that observed IQ scores show larger gains than latent g does, not that there are no g gains at all.

Most importantly, Protzko overlooks the fact that g is not measured well at young ages. He does try to address this, reporting that g at ages 3 and 8 correlates so highly (r = 0.88) that the two must be the same construct. Perhaps. The problem is that a correlation says nothing about means, and here only the means matter. The complexity children must deal with increases over the course of development, as Jensen (1998, p. 281) noted:

It is probably because of the g demand of reading comprehension that educators have noticed a marked increase in individual differences in scholastic performance, and its increased correlation with IQ, between the third and fourth grades in school. In grades one to three, pupils are learning to read. Beginning in grade four and beyond they are reading to learn.

It follows that IQ gains should be larger at an early age (Jensen, 1973, p. 91) and that tests suited to age 3 become too easy for children at age 6 (Jensen, 1980, p. 287), yet neither outcome will show up in a correlation as long as the rank order of individuals stays the same. More generally, Jensen (1998, p. 245) emphasized that even a highly g-loaded battery can lose its g-loading owing to artifacts such as practice effects. This is why Jensen regarded processing speed as the purest manifestation of g, insofar as it reflects the quality of information processing in the brain and demands controlled rather than automatic processing. Consistent with this view, a longitudinal analysis of the LBC1921 and LBC1936 found that education was positively associated with IQ changes but not with processing speed, assessed by reaction times measured at ages 83 and 70, respectively (Ritchie et al., 2013).

Jensen (1998, p. 334) made it clear that no matter how large a cognitive gain on a highly g-loaded test, it bears no importance unless it implies far transfer. This is why the Ritchie et al. (2013) study was so important. Quasi-experimental studies, which account for reverse causation due to self-selection, also seem to confirm the absence of far transfer.

Far transfer

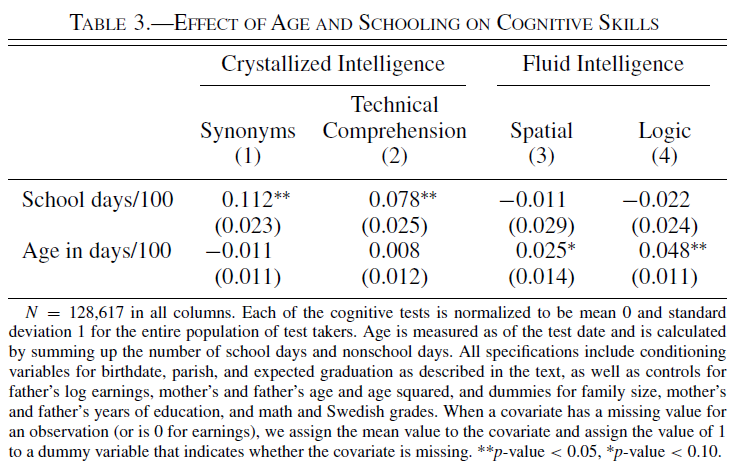

Carlsson et al. (2015) explore the causal impact of schooling on IQ by exploiting conditionally random variation in the date Swedish males took the military enlistment test battery, in preparation for military service, between 1980 and 1994. Such a quasi-experimental design allows the effects of age and of the number of school days on IQ to be separated. As the authors noted: “Age at the time of the test equals cumulative school days plus cumulative nonschool days, so if all individuals take the test on the same date and start school on the same date, there is no independent variation in school days and nonschool days for individuals with the same birthdate.” The logic of their regression model follows from this: “Remembering that age equals test date minus birthdate, random variation in test date provides random variation in age only after conditioning on birthdate. Likewise, recognizing that school days plus nonschool days equals age, school days are also random only after conditioning on birthdate.” The results show that school days improve crystallized intelligence (synonyms and technical comprehension tests) but not fluid intelligence (spatial and logic tests). The negative coefficients of school days on fluid ability imply that nonschool days improve fluid ability relative to school days. Students with low and high grades in math and Swedish benefit equally from schooling in crystallized ability.
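The identification logic in the two quotes can be illustrated with a small simulation. Everything here is an assumption made for illustration: the school calendar, birthdates, effect sizes, and noise level are hypothetical. It is a toy version of the idea, not Carlsson et al.’s actual specification. Once birthdate is held fixed with dummies, random test dates generate separate variation in school days and nonschool days, and a regression recovers both effects.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical school calendar (an assumption, not the actual Swedish one):
# day 0 is the common school start date, and school is in session on the
# first 270 days of every 365-day year; the remaining days are vacation.
HORIZON = 365 * 12
in_session = (np.arange(HORIZON) % 365) < 270
# school_days_before[t] = number of school days in [0, t)
school_days_before = np.concatenate(([0], np.cumsum(in_session)))

n = 5000
cohorts = np.array([-2600, -2550, -2500, -2450])  # hypothetical birthdates (days)
birthdate = rng.choice(cohorts, size=n)
test_date = rng.integers(365 * 10, 365 * 11, size=n)  # random test date

age = test_date - birthdate          # age = test date - birthdate
school = school_days_before[test_date]
nonschool = age - school             # school days + nonschool days = age

# Hypothetical "true" effects per day and a separate level per birthdate cohort.
BETA_SCHOOL, BETA_NONSCHOOL = 0.004, 0.001
cohort_index = np.searchsorted(cohorts, birthdate)
score = (0.1 * cohort_index
         + BETA_SCHOOL * school
         + BETA_NONSCHOOL * nonschool
         + rng.normal(0.0, 0.1, size=n))

# Condition on birthdate with one dummy per cohort (no separate intercept),
# then regress the score on school days and nonschool days.
dummies = (birthdate[:, None] == cohorts[None, :]).astype(float)
X = np.column_stack([dummies, school, nonschool])
coef, *_ = np.linalg.lstsq(X, score, rcond=None)
est_school, est_nonschool = coef[-2], coef[-1]

print(f"school-day effect: {est_school:.4f} (true {BETA_SCHOOL})")
print(f"nonschool-day effect: {est_nonschool:.4f} (true {BETA_NONSCHOOL})")
```

In this toy setup, the vacation period is what makes school days a nonlinear function of the test date. If every day were a school day, nonschool days would be constant within a birthdate cohort, and the two effects could not be separated.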

Another quasi-experimental study (Finn et al., 2014) used admission lotteries in Massachusetts to analyze the impact of years of charter school attendance on MCAS scores in math and English and on a measure of fluid ability composed of processing speed, working memory, and fluid reasoning tests. Controls included lagged 4th-grade math and English scores, limited English proficiency, special education status, and age. An indicator of whether a student was randomly offered enrollment served as the instrumental variable, isolating exogenous variation in years of attendance. Each additional year increased 8th-grade math scores by 0.129 SD, but 8th-grade English scores by only 0.059 SD and fluid ability by only 0.038 SD. However, Cheng et al.’s (2015) meta-analysis of experimental studies of “No Excuses” charter schools reports larger gains of 0.25 SD and 0.16 SD for math and English language, respectively. The main reason seems to be that No Excuses schools focus intensely on raising the achievement scores of students from low-income and racial minority backgrounds. Two points should be made clear. First, when we focus on low-SES children, intervention programs also fail to increase intelligence. Second, if school quality rather than years of schooling is what truly matters, a large review of the effects of school quality finds no improvement in scholastic tests.
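The role of the lottery as an instrument can be sketched with simulated data. The data-generating process is hypothetical: an unobserved “motivation” that drives both attendance and scores, an assumed effect size, and assumed noise. The estimator is the simple Wald ratio for a single binary instrument, not Finn et al.’s full model with covariates. The naive OLS slope is inflated by self-selection, while the IV estimate recovers the assumed effect.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200_000

# Hypothetical data-generating process (an assumption for illustration only):
# unobserved motivation raises both years of attendance and test scores,
# which is the self-selection problem a randomized lottery is meant to solve.
motivation = rng.normal(size=n)
offer = rng.integers(0, 2, size=n)  # randomized admission offer = instrument
years = np.clip(np.round(0.5 + 1.5 * offer + 0.5 * motivation
                         + rng.normal(0.0, 0.5, size=n)), 0, 4)
TRUE_EFFECT = 0.10  # hypothetical SD gain per year of attendance
score = TRUE_EFFECT * years + 0.3 * motivation + rng.normal(0.0, 1.0, size=n)

# Naive OLS slope: biased upward because motivation is omitted.
ols = np.cov(years, score)[0, 1] / np.var(years, ddof=1)
# Wald (IV) estimator with one binary instrument:
# cov(offer, score) / cov(offer, years).
iv = np.cov(offer, score)[0, 1] / np.cov(offer, years)[0, 1]

print(f"OLS: {ols:.3f}  IV: {iv:.3f}  true: {TRUE_EFFECT}")
```

The IV estimate is consistent here because the offer is independent of motivation and affects scores only through years of attendance. Those are the same assumptions the lottery design relies on.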

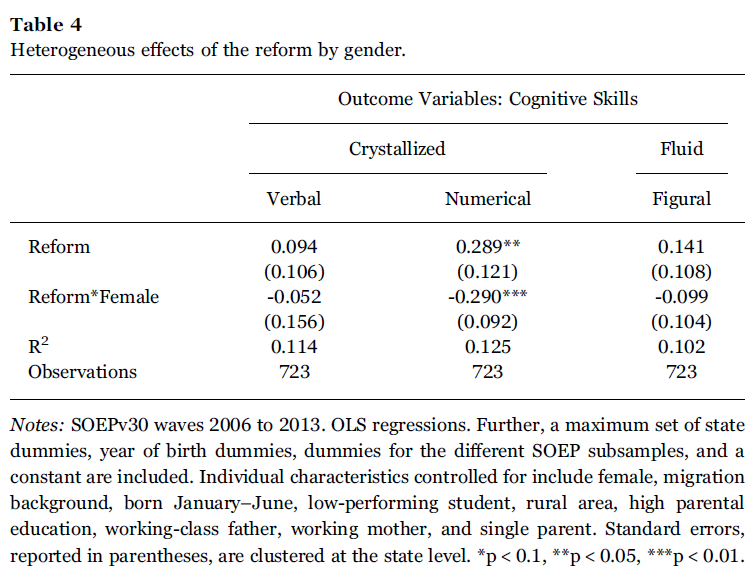

Dahmann (2017) examined the impact of instructional time and the timing of instruction on IQ scores using two German datasets, the SOEP and the NEPS. In the SOEP, students of the same age had spent an average of 5.5 or 6.5 years in high school at the time of interview. The amount of instruction differs between the control and treatment groups because the reform provides exogenous variation in the number of class hours attended. The difference-in-differences (DID) regression uses the reform as a dummy (treatment/control) variable, with controls for pre-reform individual characteristics and dummy variables for state and year of birth to account for variation across time and region. In the NEPS, all students are in their final grade of high school and belong either to the first cohort affected by the reform or to the last cohort not affected. The DID regression uses a reform dummy indicating whether a person is affected, along with controls for individual characteristics.

Results from the SOEP show that the reform raises scores on verbal and numerical tasks (crystallized) and on figural tasks (fluid) by 0.094, 0.289, and 0.141 SD, respectively, whereas the reform × female interaction yields coefficients of -0.052, -0.290, and -0.099. Adding each interaction to its main effect shows that instruction time has little or no effect among females. Results from the NEPS show reform effects on mathematics (crystallized) and on speed and reasoning tasks (fluid) of 0.003, -0.072, and -0.090 SD, whereas the reform × female interaction yields coefficients of 0.009, 0.040, and 0.017 SD. The small negative effect on fluid ability among males is due either to cohort effects or to time-specific effects. The reform widens the gender gap by favoring males, who initially had higher scores, simply because higher-ability persons learn faster.
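The reform coefficient refers to the reference group, assumed here to be males as the reform × female coding implies. The effect for females is therefore the sum of the main effect and the interaction. A few lines of Python make that arithmetic explicit using the coefficients reported above:

```python
# Coefficients as reported above for Dahmann (2017), in SD units:
# (reform main effect for the reference group, reform x female interaction).
soep = {
    "verbal": (0.094, -0.052),
    "numerical": (0.289, -0.290),
    "figural": (0.141, -0.099),
}
neps = {
    "mathematics": (0.003, 0.009),
    "speed": (-0.072, 0.040),
    "reasoning": (-0.090, 0.017),
}

def implied_female_effects(coefs):
    """Effect for females = main effect + interaction, rounded to 3 decimals."""
    return {task: round(main + inter, 3) for task, (main, inter) in coefs.items()}

print("SOEP:", implied_female_effects(soep))
print("NEPS:", implied_female_effects(neps))
```

For the SOEP this gives about 0.042, -0.001, and 0.042 SD for females on the verbal, numerical, and figural tasks.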

In both datasets, there is no strong evidence that schooling increases intelligence overall. One lesson from the SOEP data is that failing to account for heterogeneity, such as gender effects, may bias the results. This matters because some studies examined males only, such as Brinch & Galloway (2012), who found a strong effect of a Norwegian reform that increased compulsory schooling from 7 to 9 years (i.e., extending it to adolescents aged 14-16).

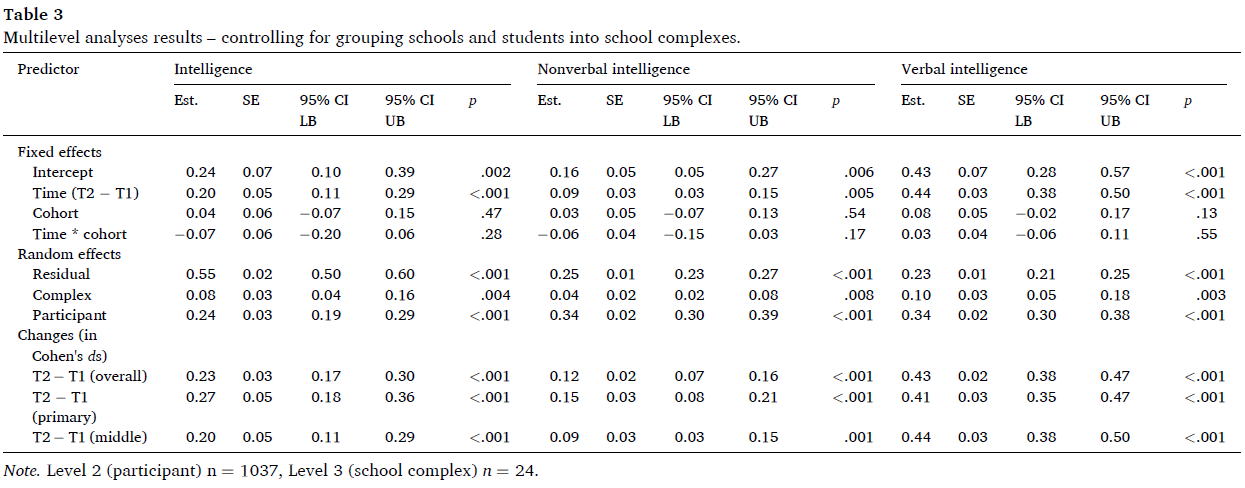

Karwowski & Milerski (2021) analyzed Poland’s 2017 educational reform by comparing 7th graders in primary schools (13.38 years old) with 2nd graders in middle schools (14.39 years old), tested at the same time. The reform increased schooling intensity by compressing 3 years of curricula into 2. MGCFA showed that measurement invariance between the two cohorts was tenable at both time 1 and time 2, but metric invariance was violated when MGCFA was applied longitudinally among 7th graders. This means that the general ability assessed one year later does not reflect the same abilities. A multilevel model was used to separate year effects from cohort effects. The effect sizes are strong for verbal intelligence but weak for nonverbal intelligence, especially among middle schoolers. The authors conclude: “Indeed, the growth we observed in more “school-based” analogies and reasoning tasks was substantial … The growth in matrices was much less spectacular overall”. This is more evidence of a lack of transfer.

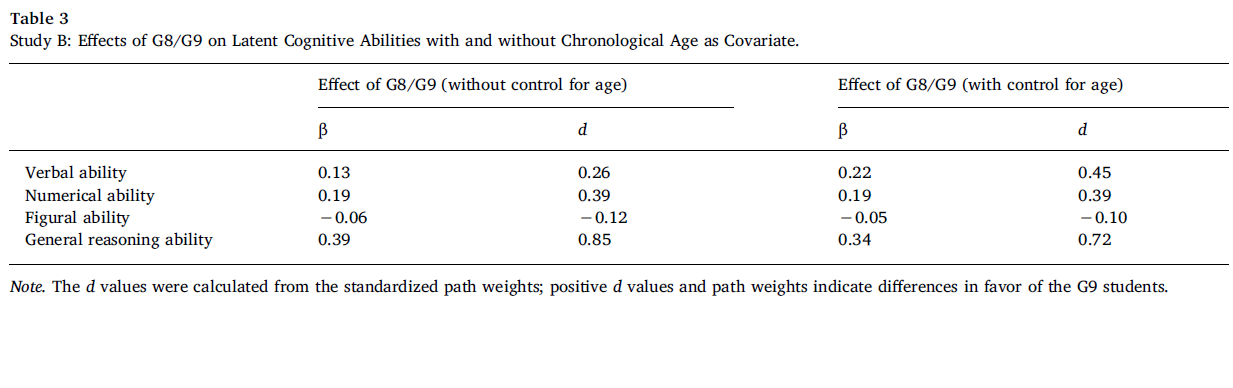

Bergold et al. (2017) analyzed the German G8 reform, which shortened schooling in the highest track of Germany’s tracked school system (Gymnasium) from 9 years (G9) to 8 years (G8) while preserving the curricular content in full. G9 students had enrolled one year earlier, whereas G8 students had to cope with more lessons per week. The IQ test was given in the same year to both groups. The ANCOVA, controlling for age, reveals a strong impact on verbal, numerical, and figural ability (d = 0.40, 0.54, and 0.38, respectively), favoring G9 students. However, when MGCFA with a second-order g was applied, intercept (scalar) invariance was violated. This outcome is expected if one group is advantaged, for example by schooling-related knowledge elicited by the IQ subtest or item (Shepard, 1987, p. 213). It also suggests the absence of far transfer. Despite this, Bergold et al. allowed the intercepts of the two biased subtests to be freely estimated. The effects of the G8 reform on the second-order g and the specific factors are shown in Table 3 below.

This result would have been wonderful had Bergold not cheated by freeing the intercepts. General ability should be evaluated in the absence of measurement bias. Furthermore, unlike Dahmann, Bergold neither included a gender interaction nor analyzed the sexes separately. Two additional critical points must be considered. The minor one: G8/G9 membership and age were highly confounded, although age has generally been found to have a small effect on intelligence, especially compared with schooling. The major one: there was no clear difference in GPA between G8 and G9 students. Bergold argues that “G9 students in Study A might have used their additional year of time to practice and to apply the contents in their daily lives somewhat more than the G8 students”. But another interpretation is simply that schooling did not increase intelligence. Yet another perspective is that the accelerated curriculum promoted the academic performance of G8 students, allowing them to keep pace with G9 students in GPA. If intensive schooling enhances academic performance along with intelligence, as one would expect, why are G8 students lagging so far behind in cognitive abilities? There are many reasons to believe that intelligence is much less malleable than scholastic achievement. As Jensen (1973, pp. 90-91) once observed about a phenomenon that is not widely known:

An interesting difference between scholastic achievement scores and intelligence test scores (including vocabulary) is that the latter go on increasing steadily throughout the summer months while the children are not in school, while there is an actual loss in achievement test scores from the beginning to the end of the summer.

This was later confirmed by a meta-analytic review (Cooper et al., 1996), which found a sharp summer decline in achievement that was unaffected by IQ level, family income, gender, or race when these were considered as potential moderators. If intelligence were just as malleable as achievement, why do we not see any significant drop in IQ during the summer?

One common fallacy, made salient by these economists and psychologists, is the assumption that general intelligence is merely the ability to think abstractly and that fluid intelligence therefore approximates g better. First, the pattern of correlations involving fluid ability depends on how it is measured, whether through traditional subtests’ g-loadings or through loadings on the Raven. Second, the crystallized/fluid dichotomy is not always clear, either in correlational patterns (Jensen, 1980, p. 529; 1998, p. 563) or in the classification of subtests, as Jensen (1980, p. 234) noted: “verbal analogies based on highly familiar words, but demanding a high level of relation eduction are loaded on gf, whereas analogies based on abstruse or specialized words and terms rarely encountered outside the context of formal education are loaded on gc.” Third, Jensen (1966) long ago warned against the proposition that nonverbal tests are culture-free:

It is generally found, for example, that lower-class children, especially among the Negroes, perform better on a highly verbal test such as the Stanford-Binet than on an ostensibly nonverbal or so-called “culture-fair” test such as the Raven Progressive Matrices (Higgins & Silvers, 1958). Such facts seem puzzling until one notes the amount of verbal behavior needed to solve many of the Progressive Matrices. This type of “nonverbal” test is even more verbal in a really important sense than many tests of vocabulary or verbal analogies. Obviously verbal tests more easily arouse and elicit verbal responsiveness. A test like the Progressive Matrices, which does not pose problems in the form of verbal stimuli, has less tendency to arouse verbal mediation in subjects who for some reason have a high threshold of arousal.

Even if schooling truly improves intelligence, one unintended outcome sometimes observed is that it increases inequality, as long as high-ability persons are not constrained by ceiling effects. Reviewing much of this evidence, Ceci & Papierno (2005) argue that high-ability persons learn at a faster rate. More recently, Huebener et al. (2017) reported such a widening gap in PISA scores in reading, math, and science following the German G8 reform. If the purpose of educational expansion is to reduce gaps by equalizing opportunities, the result may be rather disappointing.
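The fan-spread mechanism can be illustrated with a deliberately simple toy model. The model is assumed here, not taken from Ceci & Papierno (2005) or Huebener et al. (2017). If each year’s gain is proportional to a student’s current level, everyone improves, yet the gap between high and low scorers widens.

```python
from statistics import pstdev

def simulate_growth(initial_scores, rate, years):
    """Hypothetical toy model: each year, every score grows by `rate` times
    its current value, so higher scorers gain more in absolute terms."""
    scores = list(initial_scores)
    for _ in range(years):
        scores = [s * (1 + rate) for s in scores]
    return scores

start = [40.0, 50.0, 60.0]  # hypothetical achievement levels
end = simulate_growth(start, rate=0.10, years=5)

print(f"SD before: {pstdev(start):.2f}, after: {pstdev(end):.2f}")
print(f"top-bottom gap before: {start[-1] - start[0]:.1f}, after: {end[-1] - end[0]:.1f}")
```

With a ceiling on scores the high scorers would stop pulling ahead, which is the caveat noted above.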

Studies generally report a gain of about 3 IQ points per year of schooling, but hardly anyone discusses ceiling effects. Extrapolated to 15 years of schooling, this amounts to 45 points. No serious researcher truly believes this. In most studies, latent g is not modeled and far transfer is not even considered. Predictive validity is never evaluated either. If predictive validity is lacking, those IQ gains are likely hollow (Jensen, 1998, pp. 331-332).
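The implausibility of the linear extrapolation is easier to see in SD units. This assumes the conventional IQ standard deviation of 15 and a normal distribution:

```latex
3~\frac{\text{IQ points}}{\text{year}} \times 15~\text{years}
  = 45~\text{IQ points}
  = \frac{45}{15}~\text{SD}
  = 3~\text{SD},
\qquad \Phi(3) \approx 0.9987
```

Here $\Phi$ is the standard normal CDF. A person starting at the mean would end up above roughly 99.9% of the population.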

Environmental deprivation

Studies of deaf people provide the best natural experiment for testing these environmental-educational hypotheses. My earlier review of Braden’s (1994) book summarizes the important points. Deaf children experience quite severe environmental deprivation, leading to social isolation that extends into adulthood. Parental practices are anything but cognitively stimulating: mother-child interaction tends to be punitive, nonsupportive, and oriented toward compliance rather than understanding. Deaf children are also ostracized at school. Despite all this, deaf people perform almost as well as hearing people on performance IQ, though about 1 SD lower on verbal IQ. In a factor analysis, hearing loss, academic achievement, and verbal IQ load on a common factor, but nonverbal IQ does not. In a correlated vectors analysis, the score deficit associated with deafness is inversely related to subtests’ g-loadings (Braden, 1989).

If social interaction and academic achievement were causal factors of intelligence, performance IQ would also be greatly affected. For the same reason, the cultural-social multiplier effects described by Dickens & Flynn (2001) or Kan (2011) must be rejected. Braden concluded that the study of deaf people provides some of the strongest evidence against commonly proposed theories of environmental deprivation and, by the same token, against the view that racial differences are entirely due to environmental factors.

Discover more from Human Varieties


What is your opinion about the idea below?

《In this view, whether increased problem solving is due to an increase in g or non-g abilities is irrelevant. People are still getting better at solving problems, no matter what abilities they may (or may not) use. If there are multiple paths to solving problems correctly, then privileging one path (e.g., relying on a general intellectual ability like g) does not make much sense. It is a very pragmatic view that I am sympathetic towards.》

- Russell T. Warne, “Do Non-g Gains from the Flynn Effect Matter? Yes and No,” Jan 3, 2021

I haven’t read this one, but I generally appreciate Russell’s articles. I have to say I’m a bit surprised here. The very reason it matters a lot is that g has high predictive validity compared with non-g abilities. Sure, non-g abilities matter, perhaps somewhat more for some people, since certain situations require specific abilities; the first instance that comes to mind is Woodley’s cognitive differentiation-integration. But globally speaking, the demand for dealing with complexity does not seem to have decreased. So I would agree with Warne only if the g versus non-g distinction had no real-life consequences.