Despite the US Supreme Court recently overturning affirmative action (AA), many scholars believe that AA bans in higher education hurt minorities’ opportunities because affirmative action actually delivered on its promises. Although the findings lack consistency, the impact of AA on the outcomes of under-represented minorities (URM) is generally either ambiguous or slightly negative. The bans exert a less negative effect on more competitive fields such as STEM. The picture is even worse when one considers that AA comes at the cost of lowering the chance of other, more capable minorities, such as Asians, and does not greatly impact the intended targets, i.e., the impoverished families among URMs.

CONTENT

1. Bias in AA studies: unobservables and measurement issues

2. Affirmative action: impact of school selectivity

3. Affirmative action: education outcome for minorities

4. Race-blind AA in the U.S.

4.1. Race-blind AA in the U.K.

5. Affirmative action bans

6. Differential effect of AA in STEM?

7. Reinstatement of Affirmative Action

8. Affirmative Action against Asians

9.1. Affirmative action in Brazil

9.2. Affirmative action in India

9.3. Affirmative Action in Colombia

9.4. Affirmative Action in Sri Lanka

10. Nail in the coffin?

A first annoyance when it comes to reviewing the evidence is the tremendous number of papers published thus far, several hundred, far exceeding my patience. Most papers were reviewed by others, and the ones reviewed in detail in this article are those I deem to be either important or overlooked or rarely cited. A second annoyance is the lack of congruence, due to several studies failing to replicate or using different samples, model specifications or definitions of variables. A third annoyance is that authors sometimes claim their analysis supports their “preferred” hypothesis while the numbers tell a different story. The literature is riddled with hypocrisy and dishonesty.

1. Bias in AA studies: unobservables and measurement issues.

Among several arguments used against AA, the mismatch is the most debated one (Lempert et al., 2006; Sander, 2005, 2013, 2014; Thaxton, 2022). Undermatch happens when high ability students attend relatively low quality colleges and overmatch happens when low ability students attend relatively high quality colleges. The mismatch theory posits that mismatches cause students to perform worse than if they were well matched. The evidence of overmatch effect lowering students’ performance, compared to their well-matched peers, is seen as a failure of AA policies to improve the outcome of disadvantaged students (often blacks and hispanics) by offering them access to more selective colleges. A related argument is the possible interaction of college quality with student ability which shows the heterogeneity of the effects and forms the basis of the mismatch hypothesis.

Some definitions need to be clarified. Arcidiacono et al. (2011a) distinguish between local and global mismatch: the former effect happens when the marginal students are made worse off while the latter effect happens when the group is made worse off. Sen (2023) distinguishes between mismatch based on test scores and mismatch based on readiness for university. Sen argued that the absence of score mismatch does not rule out readiness mismatch, which may be unobserved, because “If students from disadvantaged backgrounds, who are admitted to university through [AA], possess less knowledge about the necessary skills for success in a university setting, it is expected that these students would experience lower academic and labor market outcomes.” (p. 27). Yet the usual test of mismatch involves the former, rarely the latter. Dillon & Smith (2017) argued that mismatch may still occur due to imperfect information about the student’s true ability. That is, the SAT score alone isn’t enough.

Upon examining mismatch effects, the results from different studies are rarely consistent. Confounding factors lead to weak inferences about the causal effect of college quality, and produce inaccurate estimates of mismatch effects. As Arcidiacono & Lovenheim (2016) noted:

This difficulty is due to the fact that students select schools and schools select students based on factors that are both observable and unobservable to researchers. […] Thus students who are similar in terms of observables but who go to law schools of differing quality likely also differ in terms of these unobservable factors: the students attending more-elite law schools have higher unobserved ability, conditional on observed ability measures. […] As this discussion highlights, the validity of the different approaches to measuring quality and matching effects in law school rely strongly on the underlying assumptions about how student unobserved ability is distributed across college quality tiers, across racial groups within each tier, and across racial groups.

But even correction for just observables can mask heterogeneity. Absence of selection on observables on average can also result from biases that cancel out across the distribution of college quality.

Failure to account for selection eventually leads to dramatically different conclusions. Bowen & Bok (1998) collected data on 28 selective US colleges. They found a rather strong effect of selectivity tiers but no evidence of a mismatch effect, yet they did not address the issue of selection bias, or at least not directly (see their book: 1998, pp. 127-128). Dale & Krueger (2002, Tables 7-8) reanalyzed their data alongside the NLS-72 data. After correcting for selection bias, the positive impact of college selectivity disappears. However, they found a negative coefficient of the interaction between parental income and school SAT, which indicates that students from low-income families earn more by attending selective colleges. Dale & Krueger (2002, 2014) provide an illustration of this self-selection bias:

For example, past studies have found that students are more likely to matriculate to schools that provide them with more generous financial aid packages. … If more selective colleges provide more merit aid, the estimated effect of attending an elite college will be biased upward because relatively more students with greater unobserved earnings potential will matriculate at elite colleges, even conditional on the outcomes of the applications to other colleges. If this is the case, our selection-adjusted estimates of the effect of college quality will be biased upward. However, if less-selective colleges provide more generous merit aid, the estimate could be biased downward. More generally, our adjusted estimate would be biased upward (downward) if students with high unobserved earnings potential are more (less) likely to attend the more selective schools from the set of schools that admitted them.

A related issue is how college quality/selectivity is typically measured. Williams (2013, Tables 6-7) used a 2SLS model with being admitted to first-choice law school as the instrument to correct for selection on unobservables, and grouped selectivity tiers into selective (top two tiers) and non-selective (bottom two or four tiers) as a way of dealing with measurement error, since that grouping mitigates the problem of overlapping tiers. Williams believes that using the bottom two tiers (i.e., removing the two middle tiers) is a better way of correcting for noisy tiers. Based on BPS data, there is a negative effect of selectivity on graduation among blacks, although the coefficients are non-significant at p<0.05 (due to the SEs being very large) regardless of whether non-selectivity is defined as the bottom two tiers or the bottom four tiers. On the other hand, there is a negative and significant effect of selectivity on bar passage regardless of how non-selectivity is defined. Camilli & Welner (2010, p. 517) argue that the middle tier is the best counterfactual, and removing it decreases the quality of the ATT estimator (i.e., Average Treatment effect on the Treated) and its real world validity. Removing a tier also substantially reduces the sample size. Camilli et al.’s (2011, Table 4) own analysis of the BPS, based on propensity score matching and using a similar grouping of selectivity tiers (1-2 for elite and 3-6 for nonelite), found a negative match effect (often non-significant due to small sample sizes) for the least-credentialed black students and Asian students but more generally weak support for the mismatch hypothesis, although they did not correct for selection bias.
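To make the instrumental-variable logic concrete, here is a minimal sketch of the Wald estimator, which is what 2SLS reduces to with a single binary instrument and no covariates. It is not Williams's specification: the data are simulated, and the function names, the planted effect of -0.10 and every other parameter are hypothetical choices made only for illustration.

```python
import random

def simulate(n=40000, true_effect=-0.10, seed=0):
    """Simulate (z, d, y) rows; all parameter values are hypothetical."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        ability = rng.gauss(0, 1)           # unobserved by the researcher
        z = 1 if rng.random() < 0.5 else 0  # instrument, assumed as good as random
        # Attending a selective school depends on the instrument AND on ability.
        d = 1 if 0.8 * z + 0.5 * ability + rng.gauss(0, 1) > 0.6 else 0
        # Ability raises the outcome; the selective school lowers it by true_effect.
        y = 0.5 + true_effect * d + 0.2 * ability + rng.gauss(0, 0.1)
        rows.append((z, d, y))
    return rows

def mean(values):
    values = list(values)
    return sum(values) / len(values)

def naive_difference(rows):
    """Treated-minus-control mean outcome, ignoring selection on ability."""
    treated = mean(y for _, d, y in rows if d == 1)
    control = mean(y for _, d, y in rows if d == 0)
    return treated - control

def wald_iv(rows):
    """Reduced form divided by first stage (2SLS with one binary instrument)."""
    reduced_form = (mean(y for z, _, y in rows if z == 1)
                    - mean(y for z, _, y in rows if z == 0))
    first_stage = (mean(d for z, d, _ in rows if z == 1)
                   - mean(d for z, d, _ in rows if z == 0))
    return reduced_form / first_stage

rows = simulate()
print(f"naive difference: {naive_difference(rows):+.3f}")
print(f"Wald / IV:        {wald_iv(rows):+.3f}")
```

In this simulation the naive comparison is pushed upward because higher-ability students sort into the selective school, while the IV estimate stays close to the planted effect. The sketch only works because the simulated instrument is random and affects the outcome solely through attendance, which is exactly what is hard to guarantee in real admissions data.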

Black & Smith (2006) argue that most studies likely underestimate the college quality effect due to measurement error caused by using only one indicator. They analyze 5 indicators: faculty-student ratio, rejection rate of applicants, freshman retention rate, SAT score of the entering class, and mean faculty salaries. After comparing multiple estimation methods, e.g., factor analysis, instrumental variable, GMM, they found that GMM estimator produced the most reliable estimates. According to them, factor analysis suffers from attenuation bias and IV suffers from scaling problems (i.e., indicator and latent variables not sharing the same scale) but GMM overcomes these issues. They also found that the school SAT is the single most reliable indicator of school quality.
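The attenuation argument can be illustrated with a small simulation. The sketch below is not Black & Smith's GMM estimator: it only compares a regression on one noisy indicator with a regression on the simple average of five equally noisy indicators. The true slope of 1.0, the noise levels and the function names are all assumptions chosen for illustration.

```python
import random

def ols_slope(x, y):
    """Slope of the least-squares line of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def simulate_slopes(n=20000, beta=1.0, noise_sd=1.0, k=5, seed=1):
    """Return (slope using one indicator, slope using the mean of k indicators)."""
    rng = random.Random(seed)
    quality = [rng.gauss(0, 1) for _ in range(n)]          # latent college quality
    outcome = [beta * q + rng.gauss(0, 0.5) for q in quality]
    # Each indicator is the latent quality plus independent measurement error.
    indicators = [[q + rng.gauss(0, noise_sd) for q in quality] for _ in range(k)]
    single = indicators[0]
    averaged = [sum(values) / k for values in zip(*indicators)]
    return ols_slope(single, outcome), ols_slope(averaged, outcome)

single_slope, averaged_slope = simulate_slopes()
print(f"one indicator:       {single_slope:.3f}")
print(f"mean of 5 indicators: {averaged_slope:.3f}")
```

With classical measurement error the expected slope is the true slope times the reliability ratio, here 1/(1 + 1) = 0.5 for one indicator and 1/(1 + 1/5) ≈ 0.83 for the average, so both estimates understate the true effect but the single indicator does so far more.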

Mountjoy & Hickman (2020) take issue with the college quality measure based on observed variables and propose instead the college value-added measure: “More broadly, by letting each college have its own unique impact on student outcomes, our value-added approach lets the data determine the ordering of the quality space, rather than imposing an ex-ante ordering based on a single-dimensional college observable.” (p. 33). In their analysis of Texas public universities, each college has its own treatment effect (value-added) by using UT-Austin as comparison school against which all the others are measured. They found that a college’s value-added on the probability of completing a STEM (non-STEM) major correlates strongly (not at all) with its earnings value-added. They also found (Figures 14-15) no heterogeneity of attending a given selective college across student characteristics, including race, family income, pre-college cognitive and non-cognitive skills, which might be evidence against mismatch effect.

There is an added difficulty when measuring the impact on earnings. Altonji and Zhong (2021) listed three reasons why earnings could differ across periods: changes in ability and preferences, experience in job market that affects occupational choice, experience that affects occupation-specific earnings. A more appropriate model is to use individual fixed effects and individual-specific trends, as proposed by Stevens et al. (2019). While it addresses concerns about secular earnings growth, it does not account for bias stemming from secular earnings that occur post-enrollment.

Another, related confound not often discussed is the change in students’ qualifications over time. If minorities improve their admissions index score, as may happen (Lempert et al., 2006, p. 51), then their graduation rates also will improve over time, and this ultimately will affect the magnitude of mismatch effects differentially across cohorts. For instance, Smith et al. (2013) found that undermatch has decreased between 1992 and 2004, in part because of students being more likely to apply to a matched college, and in part because of changing college selectivity over this time period.

Perhaps the least discussed issue is the high correlation between student ability and college quality. When two variables are so highly correlated, a recommended method is to employ multilevel models to properly separate their effects. I provided an illustration earlier. Smyth & McArdle (2004) used this technique. They showed that ignoring nested structure overestimates the impact of college quality.

Anyone willing to learn more should check Thaxton’s (2020) detailed article illustrating many possible biases that weaken causal inferences (see, e.g., pp. 861-862, 870, 881, 887, 894-896, 899, 907-908, 930).

2. Affirmative action: impact of school selectivity.

The proponents of AA often insist on the importance of school quality. Some scholars prefer using selectivity as opposed to school quality due to the ambiguity of the latter term. Arcidiacono & Lovenheim (2016, pp. 29-32), and Lovenheim & Smith (2022, pp. 42-55, 64-67, 69-70) already reviewed a large number of past research, concluding that the effect of selectivity on income is positive. There are a few studies however worth discussing, as they not only highlight important methods but show that the positive outcomes aren’t restricted to the United States.

Brand & Halaby (2006) analyzed a sample of 10,317 graduates in 1957 using the WLS data. School selectivity is based on national rankings supplied by Barron’s index. They employ a matching estimator method which, unlike traditional regression, does not assume linearity in covariates: “In contrast, matched control units serve as observation-specific counterfactuals for each treated unit, thereby avoiding bias due to misspecification of the functional form.” (p. 757). They compute 3 effects: 1) the average treatment effect (ATE), i.e., the expected effect of attending an elite college on a randomly drawn unit from the population, 2) the average treatment effect on the treated (ATT), i.e., the effect of attending an elite college for those who did attend, 3) the average treatment effect on the controls (ATC), i.e., the effect of attending an elite college for those who actually attended a non-elite college. Their model includes covariates such as family background indicators, class rank, IQ, college track, math ability, private/public high school. The estimates adjust the within-match mean differences in the outcome variables for post-match differences in covariates. Results show that after controlling for pre-college covariates, which likely influence selection into elite colleges, the only strong positive impacts of selectivity with respect to the ATE are on educational attainment (bachelor, PhD) and on early, mid-career and late-career occupational status. There is a positive effect of selectivity on the likelihood of obtaining a bachelor’s degree among the ATT (6.7%) and ATC (24%) groups, and of obtaining a PhD among the ATT (12%) and ATC (13%) groups, but only a modest effect on late-career occupation.
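The three estimands are easiest to tell apart on a toy table of potential outcomes. The numbers below are invented, not the WLS data, and are constructed only so that the effect is larger for non-attendees than for attendees, as in the pattern described above. In real data only one of the two potential outcomes is observed per person, which is why a matching estimator is needed at all.

```python
# Each row: (outcome if non-elite, outcome if elite, actually attended elite?)
# Hypothetical graduation probabilities, for illustration only.
units = [
    (0.80, 0.85, True), (0.90, 0.95, True), (0.85, 0.95, True),
    (0.40, 0.60, False), (0.50, 0.75, False), (0.30, 0.55, False),
    (0.60, 0.80, False), (0.45, 0.75, False),
]

def average_effect(rows):
    """Mean of (elite outcome - non-elite outcome) over the given rows."""
    return sum(y1 - y0 for y0, y1, _ in rows) / len(rows)

ate = average_effect(units)                            # everyone
att = average_effect([u for u in units if u[2]])       # those who attended
atc = average_effect([u for u in units if not u[2]])   # those who did not

share_treated = sum(1 for u in units if u[2]) / len(units)
print(f"ATE={ate:.3f}  ATT={att:.3f}  ATC={atc:.3f}")
print(f"weighted check: {share_treated * att + (1 - share_treated) * atc:.3f}")
```

The last line illustrates that the ATE is the treated-share-weighted average of the ATT and the ATC, so a large ATC combined with a small ATT says the students who would gain most are mostly those who did not attend.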

Dale & Krueger (2014, Tables 5-6, 8-9) examine how college selectivity (or quality) affects future earnings while controlling for selection bias owing to unobserved student characteristics, by using as proxy the average SAT score of the colleges that students applied to (which they call a self-revelation variable). The rationale for this approach is that “individuals reveal their unobserved characteristics by their college application behavior” or more specifically “that students signal their potential ability, motivation, and ambition by the choice of schools they apply to” (p. 327). The data is based on the 1976-1989 cohorts of the C&B Survey. The earnings data come from administrative records and thus are much more reliable than self-reported earnings. College selectivity is measured with either college SAT, net tuition or Barron’s index. The basic regression model adjusts for race, sex, predicted parental income, student SAT and GPA, and the self-revelation model adds unobservables and dummies of the number of applications the student submitted. In the basic model, college quality predicted higher earnings and its effect increased over time (e.g., reaching 10%) regardless of the college selectivity measures. However, in the self-revelation model, the coefficients were much smaller (generally 1-2%) and non-significant despite the large sample size. The analysis is reconducted by including only blacks and hispanics in the 1989 cohort (N=1,508). Selectivity measured with school SAT, net tuition, Barron, displays coefficients of 0.076 (SE=0.042), 0.138 (SE=0.092), 0.049 (SE=0.046), respectively, which indicates between 4.9% and 13.8% increase in earnings depending on the measure although most coefficients have large clustered SEs. A final analysis which includes school SAT and parent education as main and interaction effects shows that attending a high-SAT school improves students’ earnings for parents with 12 years of education but not at all for parents with 16 years of education.
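To see how imprecise the minority-sample estimates are, the quoted coefficients and standard errors can be turned into percent effects and rough confidence intervals. The sketch below assumes the coefficients are in log points (as the percentage reading above implies) and uses a normal approximation with 1.96 for the 95% interval; both are assumptions of this illustration rather than details taken from the paper.

```python
import math

# Coefficients and clustered SEs as quoted above (blacks and hispanics, 1989 cohort).
estimates = {
    "school SAT": (0.076, 0.042),
    "net tuition": (0.138, 0.092),
    "Barron's index": (0.049, 0.046),
}

def summarize(coef, se, z=1.96):
    """Exact percent change implied by a log-point coefficient, plus a
    normal-approximation 95% interval expressed in log points."""
    percent = math.expm1(coef) * 100
    return percent, (coef - z * se, coef + z * se)

for name, (coef, se) in estimates.items():
    percent, (low, high) = summarize(coef, se)
    print(f"{name}: {percent:.1f}%  CI [{low:+.3f}, {high:+.3f}]  t = {coef / se:.2f}")
```

Under these assumptions the point estimates translate to roughly 7.9%, 14.8% and 5.0%, and all three intervals include zero, which is consistent with the remark about large clustered SEs.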

Melguizo (2008) analyzes the NELS:88/2000 and uses a similar strategy to control for selection bias by including the average SAT score of the schools that students applied to. The model includes race/gender dummies, SES, senior test scores, participation in high school extracurricular activities (as a proxy for motivation), and academic preparation (following a college preparatory program in high school versus a general/vocational program). College selectivity (using Barron’s index) improves college completion but the coefficients diminished somewhat after correction for unobservables following Dale & Krueger’s approach, suggesting upward bias in college quality effects due to self-selection. The matching estimator shows that blacks and hispanics gain more than whites from selective schools.

Andrews et al. (2016, Table 4) evaluate quantile treatment effects of attending flagship universities such as UT Austin and Texas A&M as opposed to non-flagship universities in Texas. After controlling for background, the math, reading and writing sections of the TAAS as well as relative rank, both Asians and whites benefit greatly from attending either UT Austin or Texas A&M while blacks and hispanics benefit greatly only from attending Texas A&M.

Anelli (2020, Table 4) examines Italian students who graduated from college-preparatory high schools between 1985 and 2005 in a large city. The model employs regression discontinuity (RD) with gender, parental house value as controls, dummy for students commuting from outside the city, and admission test year as fixed effects, and admission score in deviation from cutoff as running variable. RD requires local randomness, i.e., that the probability of treatment around the threshold does not depend on observables or unobservables that are correlated with the outcome variable (i.e., no selection bias). This random assignment assumption is confirmed by the absence of discontinuity in the density of applicants at the admission threshold as well as by the pre-treatment characteristics varying smoothly across the threshold. The discontinuity effect (i.e., how much the regression line “jumps” by crossing the cutoff) has a coefficient of 0.381 in log income for attending the elite university. The estimates are robust since there is no discontinuity in score above cutoff when missing income or zero income is used as dependent variable.
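The mechanics of a regression discontinuity can be sketched in a few lines. This is a simplified sharp design on simulated data, not Anelli's model: there are no controls or fixed effects, the bandwidth of 0.5 is arbitrary, and the planted jump of 0.381 is borrowed from the text only so the target is recognizable. All function names are hypothetical.

```python
import random

def fit_line(x, y):
    """Ordinary least squares for y = a + b*x; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b = (sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
         / sum((xi - mx) ** 2 for xi in x))
    return my - b * mx, b

def simulate(n=20000, jump=0.381, seed=2):
    """Simulated (score minus cutoff, log income) pairs with a planted jump."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        score = rng.uniform(-1, 1)   # running variable, centered at the cutoff
        admitted = score >= 0        # sharp design: the cutoff decides treatment
        log_income = (10.0 + 0.5 * score
                      + (jump if admitted else 0.0) + rng.gauss(0, 0.3))
        data.append((score, log_income))
    return data

def rd_estimate(data, bandwidth=0.5):
    """Difference between the two fitted lines evaluated at the cutoff."""
    left = [(s, y) for s, y in data if -bandwidth <= s < 0]
    right = [(s, y) for s, y in data if 0 <= s <= bandwidth]
    intercept_left, _ = fit_line(*zip(*left))
    intercept_right, _ = fit_line(*zip(*right))
    return intercept_right - intercept_left

print(f"estimated jump at cutoff: {rd_estimate(simulate()):.3f}")
```

Because the running variable is centered at the cutoff, each intercept is the fitted value at the threshold, and their difference is the "jump". For scale, a jump of 0.381 log points corresponds to exp(0.381) − 1, or roughly 46% higher income.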

Canaan & Mouganie (2018) analyze French students who marginally passed the Baccalaureate exam in the academic year 2001-2002, based on data from the statistical office INSEE. They use a regression discontinuity (RD), for which the identifying assumption is that all determinants of future outcomes vary smoothly across the threshold, which means that any discontinuity at the threshold is interpreted as a causal effect. In this case, the threshold is scoring above 10 (out of 20) on the Baccalaureate exam at first attempt. The random assignment is confirmed by the absence of discontinuity in the Brevet and math exam scores as well as the smoothness (across the threshold) of covariates known to affect education and wages. The RD model includes a dummy indicating whether a student passed or failed the exam, a function which captures the relationship between the running variable (which determines who gets “treated”) and the dependent variable, and covariates which include exam specialization fixed effects, date of birth, number of siblings, birth order, SES, Brevet examination score, and grade 6 Math exam. A higher quality school is defined as one having a higher average Baccalaureate score. They found that the discontinuity (i.e., threshold crossing) has no impact on having a post-Baccalaureate degree or more years in education but has a strong positive impact on school quality and on being enrolled in a STEM major, and increases 2011-2012 earnings by 13.6%. Since no school outcome other than quality changes at the threshold, the only channel leading to greater earnings seems to be school quality. The effect on earnings is not confounded by employers who could use passing at first attempt as a signal of productivity since there is no discontinuity among students who did not attend college.

Jia & Li (2021, Tables 3-5) use a sample of Chinese college graduates from the Chinese College Student Survey, waves 2010-2015, to examine how the Gaokao national exam score affects elite college enrollment (which is defined as national first-tier in the recruitment process). The RD model includes score above cutoff, score deviation from cutoff as running variable, the linear or quadratic interaction term of this running variable with score above cutoff, as well as province-year-track fixed effects. Sampling weights are applied. The random assignment holds since the exam score distribution and covariates (e.g., age, repeating exam, rural, parent’s income/degree) showed no discontinuous jump at the threshold. Results show that scoring above the cutoff increases admission by 18%, is negatively associated with majoring in economics, finance and law, and is positively associated with majoring in humanities fields, but has no effect on majoring in STEM. Standard errors are large for most estimates. Furthermore, scoring above the cutoff increases monthly wage by 5.2% or 9.2% depending on whether interaction controls are linear or quadratic. The estimates are robust to missing wages.

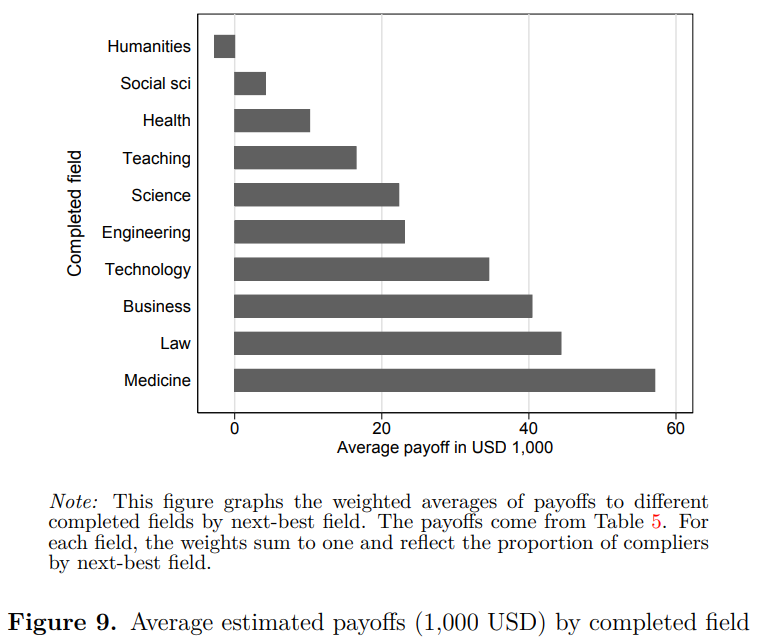

While not denying the positive effect of college selectivity/quality, the choice of field is no less important in determining wage premiums. Kirkebøen et al. (2016, Figures 6 & 9) examine the Norwegian administrative data for the years 1998-2004. Students apply to a field and institution simultaneously. In this admission process, applicants who score above a certain threshold are much more likely to receive an offer for a course they prefer as compared to applicants with the same course preferences but a marginally lower application score. The best ranked applicant gets his preferred choice, the next ranked applicant gets the highest available choice for which he qualifies, and so on. The method employs a 2SLS model with a dummy for completed field, fixed effects for preferred field, and controls for gender, cohort, age at application and application score. Selection bias is accounted for by using the next-best alternative field as an instrument, which is essential to identify treatment effects when there are multiple unordered treatments. Payoffs are much larger for Engineering, Science, Business, Law, and Technology compared to Health, Social Science, Teaching and Humanities.
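The allocation rule described above (best-ranked applicant first, each applicant receiving the highest-ranked choice still available) can be sketched as a serial-dictatorship loop. This is a simplification under stated assumptions: a single application score is used for all courses, and the applicants, courses and capacities are invented for illustration.

```python
def allocate(applicants, capacity):
    """Offer each applicant, in descending score order, the first preferred
    course with a free seat. applicants: list of (name, score, preferences)."""
    seats = dict(capacity)
    offers = {}
    for name, _score, preferences in sorted(applicants, key=lambda a: a[1], reverse=True):
        offers[name] = None                  # stays None if nothing is available
        for course in preferences:
            if seats.get(course, 0) > 0:
                seats[course] -= 1
                offers[name] = course
                break
    return offers

def cutoffs(applicants, offers):
    """Lowest score among applicants offered each course."""
    scores = {name: score for name, score, _ in applicants}
    lowest = {}
    for name, course in offers.items():
        if course is not None:
            lowest[course] = min(lowest.get(course, scores[name]), scores[name])
    return lowest

# Hypothetical applicants and capacities.
capacity = {"engineering": 1, "law": 1, "teaching": 2}
applicants = [
    ("A", 60, ["engineering", "law", "teaching"]),
    ("B", 55, ["engineering", "law"]),
    ("C", 50, ["law", "teaching"]),
    ("D", 45, ["engineering", "teaching"]),
]

offers = allocate(applicants, capacity)
print(offers)
print(cutoffs(applicants, offers))
```

In this toy run applicant B falls just below the engineering cutoff and lands in law, the next-best field. Comparing applicants like A and B, who share a preferred and a next-best field but sit on either side of a cutoff, is the kind of contrast the next-best-alternative instrument exploits.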

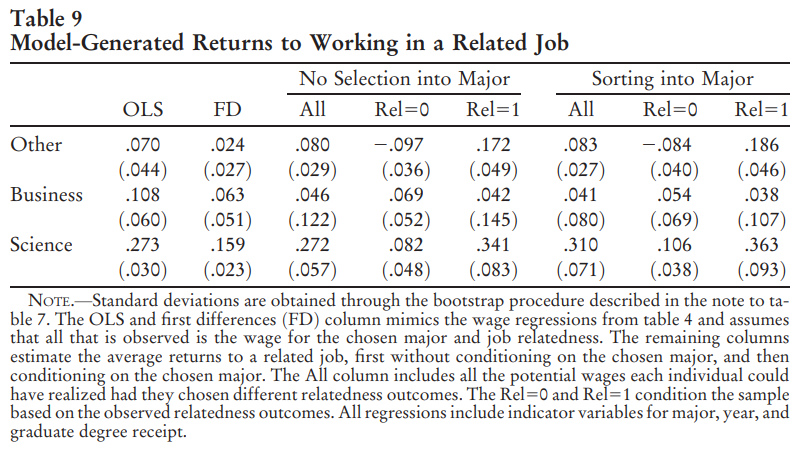

Another confound is job occupation. Kinsler & Pavan (2015) analyze the 1994 wave of the Baccalaureate & Beyond data to investigate how job occupation influences earnings. They use Roy’s self-selection model in which the workers first select into a major and then into a job type according to their unobserved skills. Models assuming no selection into a major show that science, business, and other majors choosing to work in a related job gain 0.341, 0.042, and 0.172 points, respectively, in log wage as compared to their fixed effect estimates of 0.159, 0.063, and 0.024 points. These results highlight the downward bias produced by a fixed effect approach but also the heterogeneity of the wage premium owing to job types.

Applying Roy’s model to German data containing apprenticeship training, Eckardt (2020, Table 6.1) found that workers trained in their current occupation earn 12% more than those trained outside their occupation, or 10% more after controlling for occupation-specific experience.

3. Affirmative action: education outcome for minorities.

Because AA is supposedly able to identify students with potential but weak preparation, one belief is that less prepared students should catch up to their more prepared counterparts over time. Arcidiacono et al. (2012) showed that the reduction in the black-white gap in grades over time is entirely due to differential course selection and decreased variance in grades due to upper censoring. At Duke University, 54% of black men having an initial interest in sciences, engineering and economics switch to humanities and social science compared to 8% of white men. This is because natural science, engineering and economics courses are more difficult and more harshly graded than humanities and social science courses, and racial disparities in switching behaviour are fully accounted for by students’ characteristics. Elliott et al. (1996) and Heriot (2008) also argued that the prevalence of blacks switching out of STEM is due to their poor competitive position in a highly competitive field rather than institutional racism. The differential grading practice must be taken into account. This is done (Table 3) by computing class rank adjusted for average course grades. Doing so reveals a small black catchup between year 1 and 4. A further step is taken to adjust class rank for the differential ability sorting that occurs across classes, which causes the black catchup to disappear.

Ciocca Eller & DiPrete (2018) use the ELS 2002 to identify the sources of the black-white gap in bachelor’s degree (BA) completion and attainment. A first counterfactual is used to simulate black outcomes if they were given the same probability of entering 4-year college as white students with the same pre-college dropout risk, since blacks are more likely to enroll in 4-year college given equal dropout risk. This model shows a decline in blacks’ entry rate from 37.7% to 33.1% and dropout rate from 50.4% to 48.4%, and these adjustments would reduce BA recipients among blacks from 18.7% to 17.1%. In a second counterfactual, given equal dropout risk of entering black and white students, the blacks’ earned BAs decline from 200,000 to 140,000. This reveals the core of the paradoxical persistence: blacks’ increased willingness to enroll in college causes them to have higher dropout risk but also a higher chance of getting BAs than otherwise. This pattern is observed as well across college selectivity levels. Dropout risk has less impact on blacks’ decision to select the most selective schools, resulting in a much higher dropout risk for blacks compared to whites who attend these schools. This overmatch to highest quality colleges among blacks may be due in part to affirmative action. A regression of college dropout on black, dropout risk, college quality main and interaction effects, shows that college quality reduces college dropout of blacks with higher dropout risk from 51% to 35%. A counterfactual, which assigns black students (among those who attend the most selective schools) to similar college quality as white students with equal dropout risk, estimates that overmatch would actually lower the dropout rate of this subgroup of blacks by 0.4%. When the counterfactual quality variable is used for all black students, BA attainment increases from 18.7% to 19.8%. Overall, overmatch neither lowers nor increases blacks’ bachelor’s degree attainment, while college quality matters more.
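The figures in the first counterfactual fit together if the BA share is read as the entry rate multiplied by one minus the dropout rate. That reading is an assumption about how the quoted numbers combine, not a formula taken from the paper, but it can be checked directly:

```python
def ba_share(entry_rate, dropout_rate):
    """Share of the group earning a BA = share entering 4-year college
    times share of entrants who do not drop out (assumed decomposition)."""
    return entry_rate * (1 - dropout_rate)

observed = ba_share(0.377, 0.504)        # actual entry and dropout rates
counterfactual = ba_share(0.331, 0.484)  # white-like entry given dropout risk

print(f"observed:       {observed * 100:.1f}%")
print(f"counterfactual: {counterfactual * 100:.1f}%")
```

Both products round to the reported 18.7% and 17.1%. The lower entry rate outweighs the slightly lower dropout rate, which is the arithmetic behind the "paradoxical persistence" described above.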

Arcidiacono (2005, Tables 12-14), using data from the NLS72, simulated the effects of removing black advantages in admission and in financial aid. The models account for unobserved ability as it may cause serious bias: “For example, someone who has a strong preference to attend college but is weak on unobservable ability may apply to many schools, be rejected by many schools, and have low earnings. Similarly, a person with high unobserved ability may apply to one school and get an outstanding financial aid package.” (p. 1498). Removing affirmative action in both financial aid and admissions has a negative but small effect on black male earnings (14 years later) across nearly all percentiles of unobserved ability, and this despite blacks enjoying much larger premiums to attending college than their white counterparts (pp. 1506-1507). Removing the black male advantage in admissions has a small effect on the number of blacks attending college, with drops of 2.3% and 1.9% for models without and with unobserved ability, respectively. The drop in black males attending colleges with SAT>1200 is more than offset by the increase in blacks attending colleges with SAT>1100.

Arcidiacono & Koedel (2014, Tables 4-5) analyze data from Missouri, covering 13 campuses. They calculate the graduation probabilities, conditioning on ACT scores, students’ high school class rank (while applying a Heckman correction for selection bias), and high school fixed effects. At the 95th percentile of ability, the most selective colleges display higher graduation rates for both STEM and non-STEM entrants among blacks. But as ability decreases for blacks, e.g., 25th percentile and lower, there is no clear evidence that the more selective colleges display higher graduation rates among STEM entrants, whereas more selective colleges still display higher rates among non-STEM entrants. Matching effects are therefore more important in STEM fields. A counterfactual sorting model where blacks sort to colleges similarly to whites, which roughly simulates the removal of AA at top schools, shows that it would reduce the black-white graduation rate gap by 14.7% and 5.6% among women and men, respectively.

Ayres & Brooks (2005) analyze law school students using the 1991 LSAC-BPS data. The regression model controls for college quality tier and the number of schools to which the students applied (conditioning on being accepted by more than 1 school including the first choice) to limit selection bias. The key assumption is that the first-choice school should be more elite than the second and lower choices. The positive coefficient of second-choice for predicting both GPA and bar passage within 5 years indicates there is a mismatch effect, but its non-significance for predicting bar passage suggests mixed evidence of mismatch. The result is similar whether the entire sample or the black sample is analyzed. It is unclear whether or how such second-choice analysis is affected by selection bias (Lempert et al., 2006, pp. 35, 39; Thaxton, 2020, pp. 890-892, 941-943).

Fischer & Massey (2007) used the NLSF data, with a sample of students who entered 28 selective US colleges in 1999. Two measures of AA are used: 1) the individual AA, by taking the magnitude of the student’s score below the institution average, 2) the institution AA, by taking the difference between the SAT of blacks or hispanics and all students at a given institution. Their regressions control for parent SES, academic preparation and psychological preparation. They report a positive (negative) effect of individual (institutional) AA on GPA; however, the coefficients were not only close to zero but the SEs were large, ultimately resulting in p<0.10. And although the effect of individual AA on leaving college was negative, the positive effect of institutional AA on leaving college is ambiguous. More importantly, their analysis did not account for major choices and did not report the effect of AA on graduation rate or earnings. They did not discuss possible bias due to unobservables. Massey & Mooney (2007) used the NLSF data, using the same analyses as in Fischer & Massey above, but this time evaluating AA measures on minority, athlete and legacy students. Not only do the same methodological issues emerge, but the AA measures once again displayed very weak and imprecise estimates. Astonishingly, they seem very confident that they rejected the mismatch effect.

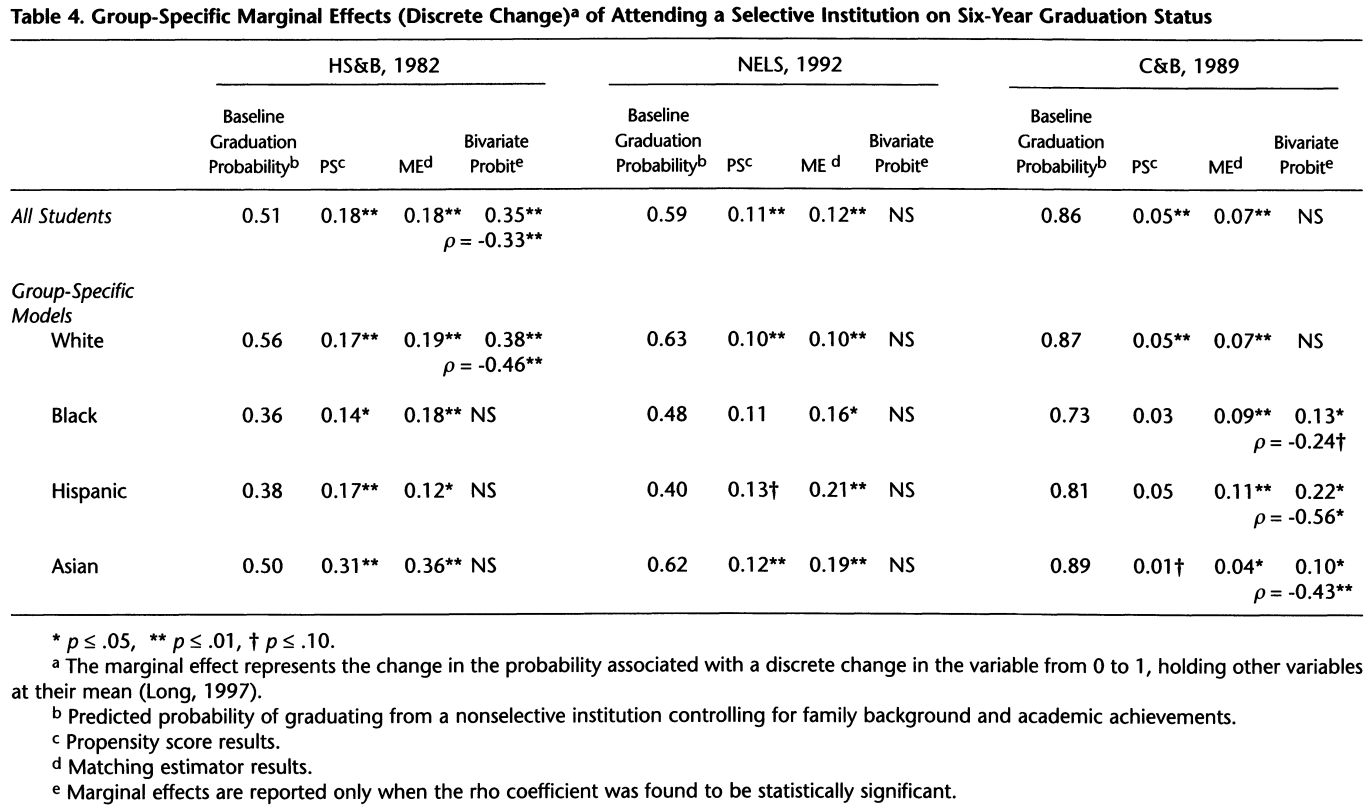

Alon & Tienda (2005, Table 4) use the HS&B-1982, NELS-1992 and C&B-1989 data. They employ two techniques to circumvent selection bias: propensity score and matching estimator. The propensity score accounts for differences in treatment effects between treated and control groups and describes how likely a unit is to have been treated, given its covariate values. The matching estimator matches individuals on the same set of covariates to obtain the average treatment effect. To control for bias due to selection on unobservables, they use a joint estimation of the likelihood of enrollment and likelihood of graduation from selective schools. Models include background and academic preparation (e.g., high school class rank, and SAT) as covariates. Based on propensity score and matching estimator methods, respectively, school selectivity (based on the entering class SAT) improves the probability of graduating for whites (17%, 19%), blacks (14%, 18%), hispanics (17%, 12%), Asians (31%, 36%) in the HS&B-1982 data, but with a smaller effect on the probability of graduating for whites (10%, 10%), blacks (11%, 16%), hispanics (13%, 21%), Asians (12%, 19%) in the NELS-1992 data, and a much smaller effect on the probability of graduating for whites (5%, 7%), blacks (3%, 9%), hispanics (5%, 11%), Asians (1%, 4%) in the C&B-1989 data. An examination of the baseline graduation rates across groups indicates that the relative gain from selective schools is higher for all minorities (including Asians) compared to the white group. But regarding the mismatch, it appears to be rejected. These authors however did not control for the interaction between school selectivity and student ability, and therefore did not evaluate ability versus selectivity effects.
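A matching estimator of the kind described above can be sketched with nearest-neighbor matching on a single covariate. This is not Alon & Tienda's implementation: the data are simulated, the planted effect of 0.10 is arbitrary, and matching is done directly on one preparation score rather than on an estimated propensity score (with a single covariate that drives selection monotonically, the two orderings coincide).

```python
import bisect
import math
import random

def simulate(n=4000, effect=0.10, seed=3):
    """Simulated (preparation, attended_selective, outcome) rows; hypothetical."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        prep = rng.gauss(0, 1)
        # Better-prepared students are more likely to attend a selective school.
        attended = rng.random() < 1 / (1 + math.exp(-prep))
        outcome = 0.3 + (effect if attended else 0.0) + 0.2 * prep + rng.gauss(0, 0.1)
        rows.append((prep, attended, outcome))
    return rows

def naive_difference(rows):
    treated = [y for _, d, y in rows if d]
    control = [y for _, d, y in rows if not d]
    return sum(treated) / len(treated) - sum(control) / len(control)

def matched_att(rows):
    """1-nearest-neighbor matching with replacement; estimates the ATT."""
    controls = sorted((x, y) for x, d, y in rows if not d)
    control_x = [x for x, _ in controls]
    gaps = []
    for x, d, y in rows:
        if not d:
            continue
        i = bisect.bisect_left(control_x, x)
        # In a sorted list the nearest control sits at position i-1 or i.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(controls)]
        nearest = min(candidates, key=lambda j: abs(control_x[j] - x))
        gaps.append(y - controls[nearest][1])
    return sum(gaps) / len(gaps)

rows = simulate()
print(f"naive difference: {naive_difference(rows):.3f}")
print(f"matched ATT:      {matched_att(rows):.3f}")
```

The naive gap overstates the planted effect because attendees are better prepared, while the matched estimate comes close to it. Matching only removes bias from the covariates that are matched on, which is why the authors add a joint estimation step for selection on unobservables.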

Dillon & Smith (2013, Tables 5A-5B) evaluate the mismatch effects by comparing latent student ability (based on the ASVAB-g) to latent college quality in the NLSY97, with overmatching (undermatching) indicating lower (higher) score than the school average. If the mismatch hypothesis is correct, race-based AA should increase the probability of overmatch for minority students compared to whites, conditional on their measured ability. College quality is a latent measure obtained with a principal component of student’s SAT, percent of applicants rejected, mean salary of all faculty engaged in instruction, faculty-student ratio, colleges with and without SAT/ACT score. Since less selective colleges do not require SAT/ACT, excluding colleges with no SAT/ACT scores will produce bias. In a multivariate analysis based on latent college quality and controlling for ASVAB g and non-g factors, GPA, SAT, wealth, parent education, region etc., mismatches were non-existent for Blacks and Hispanics compared to whites but overmatch was higher (.084) and undermatch lower (-.110) for Asians compared to whites when college quality excludes less selective schools, however overmatch and undermatch were substantial for Blacks (.084 and -.110) and somewhat for Hispanic (.06 and -.051) and Asians (.049 and -.088) when college quality includes less selective schools. When mismatch is defined based solely on student SAT relative to the average SAT of the class at his college, the mismatch runs in the opposite direction for Blacks and Hispanics, yet this measure of mismatch must exclude less selective schools (halving the sample size). Their result is somewhat ambiguous since they analyzed the entire distribution of colleges instead of elite colleges. Arcidiacono et al. (2011b) found that the black share has a U-shape pattern across college quality regardless of region, with high black share at low/high school SAT and very low black share at medium level of school SAT. 
This is likely the result of differential sorting effects, with racial preferences for blacks at the most selective colleges negatively impacting the black share at the middle selective colleges. Dillon & Smith (2017, Tables 5-6) re-analyzed the NLSY97 and reported similar numbers. Another important finding of Dillon & Smith is that the student’s “information” about college (using proxies, e.g., share of high school graduates going on to a 4-year college and living in census tracts with more college graduates) increases the probability of overmatching, which indicates that in this data at least, overmatch is the result of student’s choice and better information about colleges.

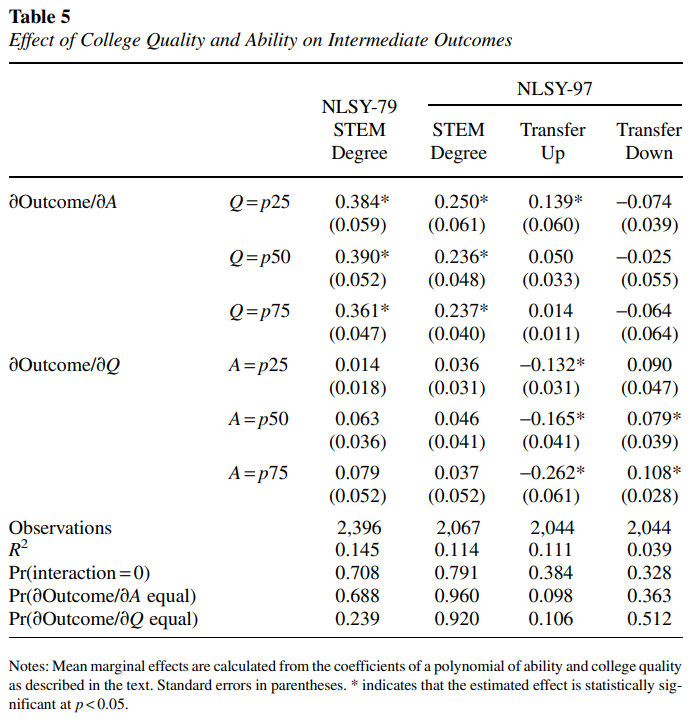

Dillon & Smith (2020, Tables 3-6) evaluate the importance of academic match between students and college by using standard models with student ability (IQ), college quality and their interaction, and background to predict six outcomes (graduation within 4-year, within 6-year, obtaining STEM degree, transfer to a higher- or lower-quality college, and 2-year income) in the NLSY79 and NLSY97. Their large set of covariates ensures that selection bias is minimized. They found that college quality and student ability are both strong predictors of graduation and earnings in both data sets, although for graduation the coefficients of college quality (student ability) increased (decreased a little) between NLSY79 and NLSY97. Interestingly, student ability is strongly related to obtaining a STEM degree while college quality shows a near-zero relationship. Another analysis involves counterfactuals to simulate reassignments of students from their actual outcome to a matched college or a 90th percentile college. Attending a top college, compared to a matched college, substantially increases the graduation and future earnings but displays inconsistent effect on obtaining STEM degree (negative in the NLSY79 and positive in the NLSY97). It is worth noting that their student-college sorting does not involve social class matching.

Lutz et al. (2018) use the ELS, wave 2004, to evaluate the impact of mismatch on graduation and GPA in selective colleges. Mismatch is calculated as student SAT minus college SAT and selectivity is defined according to the U.S. News and World Report for 2004. Their regression includes HSGPA, Advanced Placement courses, whether attended a public school, students’ college experiences, and parents’ SES. In the model that predicts graduation, there is a modest negative (or positive) coefficient for the mismatch variable before (or after) controlling for covariates, but in both cases, the estimates are not significant. The interactions between mismatch and blacks/latinos or Asians all have negative coefficients but are not significant again. In the model that predicts cumulative GPA, there is a small negative coefficient for mismatch after controlling for covariates, and no interaction with either race. This means that the larger the mismatch, the lower is the college GPA. Selection bias is not addressed however.

Golann et al. (2012, Table 3) use the NSCE data to examine the mismatch effect among hispanics. They use simplistic models with race dummies, social class, college selectivity and SAT. They found that hispanics, blacks and Asians have higher odds of six-year graduation compared to whites (only the hispanic group has a significant coefficient, despite a lower point estimate than the black and Asian groups), even though all minorities have a lower class rank at graduation compared to whites. That is, there is no mismatch effect. They neither accounted for selection bias nor used multilevel models.

Angrist et al. (2023) use data from the Chicago Public School district, where most CPS students are black or hispanic and from low-income families. AA at CPS exam schools operates by reducing cutoffs for applicants from lower-income neighborhoods. To identify exam school effects for applicants just above or below neighborhood-specific cutoffs, they use a fuzzy regression discontinuity, with individual school offer dummies as instruments. Results show that favored applicants do not have higher reading test scores, and they have lower math scores and lower four-year college enrollment. However, the effects do not differ across the more and the less selective schools. Rather than mismatch, the negative effect is due to diversion: exam school offers divert many applicants away from high-performing high schools.

Multilevel regression is an essential yet overlooked technique. Smyth & McArdle (2004) use the Bowen & Bok (1998) data on 28 of the most selective US colleges. While Bowen & Bok’s book found evidence that college selectivity tiers matter, Smyth & McArdle argued and showed that college quality effects are overestimated when single-level models are used. Their multilevel model includes race dummies, gender, HS grade and SAT-M as level-1 variables and school selectivity (i.e., school SAT) for level-2 random intercepts and slopes. They found no evidence that students matched on level-1 variables are more likely to graduate in Science, Math, or Engineering (SME) at more selective colleges. There is no evidence of selection bias from data not missing at random (fn. 3).

4. Race-blind AA in the U.S.

Since race-based policies are controversial, alternative forms of AA, often based on SES measures, have been devised. Some economists predicted early on that these would not work: despite URMs’ higher likelihood of coming from poor families, the URM group still makes up a minority of low-income students. Research shows that race-blind AA promotes less diversity than race-based AA in elite schools.

Long (2016) used a probit regression to determine how accurately URM status can be predicted when 195 variables correlated with race are used as a substitute for the race variable. Such a model correctly predicts URM status in only 82.3% of cases, a large portion of which is due to just 4 variables: school’s average SAT, student SAT, father income and citizenship. The author concludes that race-based AA cannot be substituted with race-blind AA, since background measures can only predict race with 82% accuracy. Based on Chicago’s Public Schools (CPS) data, Ellison & Pathak (2021) observed that race-neutral AA policies (including the popular top 10% rule) are less efficient than race-based AA, since increasing the share of minorities implies a larger reduction in composite scores (which combine middle school grades, SAT, entrance exam). For instance at Payton, Chicago’s race-neutral policy reduces scores by 1.1 points (on a 100-pt scale) while a racial quota policy would reduce scores by 0.3 points.

Barrow et al. (2020, Table 4) examined Chicago’s place-based affirmative action policy that allocates seats at selective enrollment high schools (SEHS) based on achievement and neighborhood SES. The effect of admission to a selective high school is small and negative on standardized test scores (PLAN and ACT). Their regression discontinuity models estimate that students admitted to SEHS have lower class rank (11 percentiles) than the counterfactual students not admitted to an SEHS, but also lower grade points (0.12). The negative impact is stronger for lowest SES (rank=-16.9; GPA9=-0.29; GPA11=-0.24), than for highest SES students (rank=-9.6; GPA9=-0.09; GPA11=-0.0). The difference in likelihood of high-school graduation is close to zero. However, after graduation, those students admitted to SEHS are on average 2.5% more likely to enroll at any college but 3.7% less likely at any selective college.
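For readers unfamiliar with regression discontinuity, the following is a minimal sketch of a sharp RD estimate: fit a line on each side of the admission cutoff and take the gap between the two fitted values at the cutoff. It is not the specification used by Barrow et al.; the data are simulated with a jump of -11 built in purely to echo the magnitude quoted above, and the cutoff, bandwidth and function names are assumptions.

```python
def ols_line(xs, ys):
    """Return (intercept, slope) of a least-squares line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def sharp_rd(scores, outcomes, cutoff, bandwidth):
    """Gap between the right and left fitted values at the cutoff (local linear fit)."""
    pairs = list(zip(scores, outcomes))
    # Center the running variable so each intercept is the fitted value at the cutoff.
    left = [(s - cutoff, y) for s, y in pairs if cutoff - bandwidth <= s < cutoff]
    right = [(s - cutoff, y) for s, y in pairs if cutoff <= s <= cutoff + bandwidth]
    left_intercept, _ = ols_line(*zip(*left))
    right_intercept, _ = ols_line(*zip(*right))
    return right_intercept - left_intercept


# Simulated, noise-free data: admission score 60..80, cutoff at 70 (all invented).
scores = list(range(60, 81))
outcomes = [50 + 0.5 * (s - 70) + (-11.0 if s >= 70 else 0.0) for s in scores]

estimate = sharp_rd(scores, outcomes, cutoff=70, bandwidth=10)
print("estimated jump at the cutoff:", round(estimate, 6))
```

Because the simulated outcome has no noise, the estimate recovers the built-in jump. With real data the estimate depends on the bandwidth, and a fuzzy design (where crossing the cutoff only raises the probability of admission) additionally rescales the jump by the change in admission probability.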

Black et al. (2023, Table 3) analyze the impact of the Texas Top Ten Percent (TTP) Rule, a race-blind policy that guarantees admission to the most selective Texas public colleges to anyone in the top 10% of their high school class in Texas, using a DiD method to estimate the graduation rates in selective colleges. Students inside and outside the top 10% are labeled Pulled In and Pushed Out, respectively. The TTP led Pulled In students to attend colleges with higher graduation rates (by 4.1%) and math test scores (by 1.9 percentiles) than the colleges they attended before the TTP. The TTP did not lower Pushed Out students’ enrollment rates but led them to shift from UT Austin toward less selective colleges (including community colleges). For the Pulled In group, degree attainment within 6 years increased by 3-4% among bachelors and associates but decreased by 0.7% (with large SEs) among bachelors with a STEM major. Furthermore, both the Pulled In and Pushed Out students showed a small increase in (log) earnings 9-11 years after graduation, but no increase at all 13-15 years after graduation. Black et al. oddly concluded that the TTP was very successful despite the policy having no clear impact on future earnings. On the other hand, the mismatch effect may be rejected in this data.

Based on data from the University of California since 1995, Bleemer (2023) estimated that race-based AA has a large effect on enrollment of URMs but a small effect on lower-income enrollment, whereas race-neutral AA policies such as top 4-percent, top 9-percent and holistic review have small effects on enrollment of URMs and no positive effect (except a small positive effect for top 4-percent) on lower-income enrollment.

Kurlaender & Grodsky (2013) took advantage of a natural experiment at the University of California in 2004, which offered admission to marginal admits (whom highly selective campuses originally rejected) through the Guaranteed Transfer Option (GTO), conditional on successfully completing lower-division requirements at a California community college. GTO students have lower GPA and SAT than non-GTO students at either elite or nonelite campuses. Their model includes campus selectivity (Barron’s index), student SAT, race, gender, parent income and education, and college major as covariates. When GTO students at elite colleges are compared with traditional admits at elite colleges, GTO students have fewer credits earned, lower GPA and higher dropout, but the difference vanishes once covariates are included. When GTO students are compared with traditional admits at nonelite colleges, the results are ambiguous. A robustness check reveals that students who accepted and declined the GTO offer do not differ in their characteristics. The authors conclude that the mismatch hypothesis is (at best) partially true, but no difference between groups is to be expected once group differences in background are adjusted for. Their samples (GTO, traditional admits at either elite/nonelite campuses) do not differ at all in racial/ethnic composition.

4.1. Race-blind AA in the U.K.

Sen (2023, Tables 4-9, A5-A7) obtained data from UCAS (i.e., UK’s university applications service) which covers cohorts between 2001 and 2015. Several universities implemented SES-based AA at different points in time and started to recruit students with lower scores if they are disadvantaged. The two-way DiD model includes a dummy for students who study at or apply to such universities, student characteristics, and fixed effects of university and cohort. Another is the two-stage DiD model: “This method uses the untreated groups to identify group and period effects in the first stage. In the second stage, it uses the whole sample to identify average treatment effects by comparing the outcomes of treatment and control group after removing the effects identified in the first stage.” (p. 15). A robustness analysis first shows that changes in student disadvantage over time do not predict universities’ likelihood of implementing AA (Table A1). Results from two-way fixed effects indicate that AA lowers the probabilities of achieving first honors and good degree by 6.7% and 7.8%, but also reduces graduation on time by 2.5% and increases dropout by 1.3%. Results from a two-stage DiD display slightly smaller coefficients. Models including an interaction of AA with areas in the lowest two quintiles of higher education attainment reveal that the highest quintiles are more negatively affected by AA. Analyses by quintile group of high school test score show that some of the lower quintile groups have worse academic outcomes. When the analysis is conducted separately by field of study, students pursuing health sciences, social sciences, and humanities, but not STEM, achieve worse academic outcomes, whereas STEM, social science, and humanities graduates are less likely to hold a job that aligns with their field. Finally, salary is not impacted by AA regardless of field. The mismatch hypothesis and the peer-effects hypothesis (which states that AA reduces the outcomes of other students) are both partially validated.

5. Affirmative action bans.

Backes (2012, Tables 3-4) analyzes public schools using the IPEDS 1990-2009 data. The DiD model includes ban, time-varying state-level controls, and fixed effects of school and year. School selectivity is based on SAT. Models accounting for state time trends show that black enrollment decreased at selective schools (1.6%) but very little on average (0.4%). The share of black and hispanic graduates at public universities decreased by 1.2% and 1.8%, respectively, at selective schools only, while the share of either black or hispanic graduates decreased by 0.6% on average (but with too large SEs for hispanics). The results can’t be explained by either group switching from 4-year to 2-year schools or migrating between states.
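Since most ban studies rely on difference-in-differences (DiD), a bare-bones version of the estimator may help. This sketch uses the simplest two-group, two-period case with invented enrollment shares; it omits the school and year fixed effects, state trends and controls that Backes and others include, and all names are hypothetical.

```python
from statistics import mean

# Hypothetical rows: (state_type, has_ban, is_post_period, black_share_pct). Invented numbers.
rows = [
    ("ban_state", 1, 0, 6.0), ("ban_state", 1, 0, 6.2),
    ("ban_state", 1, 1, 4.7), ("ban_state", 1, 1, 4.9),
    ("no_ban", 0, 0, 5.0), ("no_ban", 0, 0, 5.2),
    ("no_ban", 0, 1, 5.2), ("no_ban", 0, 1, 5.4),
]


def cell_mean(data, has_ban, is_post):
    """Mean outcome in one of the four group-by-period cells."""
    return mean(r[3] for r in data if r[1] == has_ban and r[2] == is_post)


def did(data):
    """(Change in ban states) minus (change in non-ban states)."""
    change_treated = cell_mean(data, 1, 1) - cell_mean(data, 1, 0)
    change_control = cell_mean(data, 0, 1) - cell_mean(data, 0, 0)
    return change_treated - change_control


print("DiD estimate (percentage points):", round(did(rows), 3))
```

The estimate is only credible if the two groups would have followed parallel trends without the ban, which is why the studies reviewed here add state-specific time trends.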

Arcidiacono et al. (2014, Tables 3, 8-9) examine the impact of allocating minority students to less selective schools, after Prop 209, based on the 1992-2006 data from the University of California (UC). The regression model controls for parental SES and initial major. Control for self-selection on unobservables is done following Dale & Krueger (2014). College selectivity is based on the U.S. News & World Report rankings. They found that the ban leads to an improvement in graduation rates, especially at the lowest and second lowest quartile of academic index (i.e., sum of SAT and high school GPA). They then used a decomposition model to estimate the portion of the gain in graduation rates due to matching (28%), behavioral response (51%) and selection (21%). Bleemer (2020, Table 1, fn. 2) argued that Arcidiacono used an academic index (AI) for UC that differs from UC’s contemporaneous AI. Bleemer replicated Arcidiacono’s coefficients but after changing the AI measure, Bleemer found that both the coefficients for URM and white groups are reduced to nearly zero, indicating no change in outcomes. This controversy is yet to be clarified.

Antonovics & Backes (2013) examined the change in behaviour among URM students after the ban, by using DiD to compare the minority-white gap before and after the implementation of Prop 209. The specification uses dummies of URM and ban status as well as their interaction as independent variables, and dummies of admission and SAT-score sending as dependent variables. The admission rate dropped for all URMs irrespective of their credentials. The URMs decreased the number of scores sent to the most selective schools (only two schools were affected) but increased the number of scores sent to less selective schools (it is unclear whether the decline is fully offset by the increase). Since the decline in score-sending rate was very small relative to the drop in admission rate, the authors concluded the ban did not really affect behaviour. Antonovics & Backes (2013) mentioned that earlier studies found large declines in score-sending rate because they didn’t account for the decline in admission rates. The number of URMs who took the SAT in California did not change around the implementation of Prop 209, suggesting no selection bias.

Antonovics & Backes (2014) evaluate how each of the eight UC campuses changed their admission rules after Prop 209, by using a probit regression with students characteristics (e.g., SAT, GPA, parent income and education), URM status, post ban and their interaction as independent variables and admission as dependent variable. The URMs were 40% more likely than equally qualified non-URMs to be admitted but the advantage fell to 11% after the ban. The campuses reacted by decreasing the weight placed on SAT scores and increasing the weight given to high school GPA and family background in determining their admission. The end result is that the URMs’ chance of admission increased by 5-8% relative to whites. When looking (Tables 3 & 4) into the 20% of non-URMs and 20% URMs who were the most affected by this change, i.e., the 20% losers, their chance of admission decreased by between 25-62% for non-URMs and 35-63% for URMs depending on the campuses (except UCR for which the chance is unaffected). The authors then identified these 20% biggest losers: they are the students with very high SAT scores and high parental income/education. Furthermore, the finding of virtually no change in the predicted GPA of likely admits suggests that the ban did not cause an overall decline in student quality. Finally, there is no evidence of selection bias among SAT takers.

Antonovics & Sander (2013, Table 5) tested the hypothesis of a chilling effect in minority yield rates (i.e., a decline in the probability of enrolling conditional on being admitted) after Prop 209. The model uses conditional enrollment as dependent and student/family characteristics, URM, post-ban and their interaction as independent variables. They found that the URM yield rate actually increased at 6 of the 8 UC campuses, by about 3% in general, which is a non-trivial effect. Yield rates were not affected by changes in the selection of URMs who applied to the UC. Such a result is more consistent with a standard signaling model, which posits that a school that offers admission based on their academic credentials increases the value of this signal to future employers because race is not a factor of admission. The yield rate however decreased for the UC system as a whole, but the explanation to this paradox is that if URMs are admitted to a smaller number of UC schools after Prop 209, they are less likely to attend any UC school, but more likely to attend each school to which they are accepted. Students characteristics did not change after Prop 209, suggesting no selection bias based on observables.

Yagan (2016, Table 3) examined how the ban affected black applications to law school, using the EALS data. The probit model includes black race dummy, while controlling for LSAT, GPA, selective attrition on unobserved strength (e.g., valued unobserved credentials like recommendation letters), and year fixed effects. After comparing actual black rate and hypothetical black rate if subjected to pre-ban white admission standards, it was found that black admission rate is reduced post-ban but that rate was still substantially higher than white admission at both Berkeley and UCLA, which are among the most selective schools in the US. This finding indicates some counteracting forces are still at work to favor black admission after the ban.

Hinrichs (2012, Table 3) uses difference-in-differences linear probability models that exploit variation over time and across states in affirmative action bans, based on Current Population Survey (CPS) 1995-2003 data and ACS 2005-2007 data for four states (California, Florida, Texas, Washington). Models with time trends show that the bans do not negatively affect URMs’ attendance at any college: the estimates are either close to zero or have very large standard errors, except for the ACS sample with a point estimate of 0.013 and SE=0.007. The impact on having a degree is zero. Hinrichs (2012, Tables 5-6) then uses IPEDS data to estimate the effect of the ban on racial composition. There is almost no effect on 4-year colleges. The most notable effect is a 1.74% decline for blacks and 2.03% decline for hispanics at the top 50 public colleges, off their base value of 5.79% and 7.38%, and a 1.43% increase for Asians and 2.93% increase for whites, off their base value of 22.32% and 62.15%.
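The size of these composition effects is easier to judge relative to each group's base share. The short calculation below assumes the quoted changes are percentage-point changes in enrollment share (an interpretation, not something stated in so many words above) and divides each by its base value.

```python
# (group, change in percentage points, base share in percent), as quoted in the text.
changes = [
    ("Black", -1.74, 5.79),
    ("Hispanic", -2.03, 7.38),
    ("Asian", 1.43, 22.32),
    ("White", 2.93, 62.15),
]


def relative_change(delta_pp, base_pct):
    """Change as a fraction of the base share."""
    return delta_pp / base_pct


for group, delta_pp, base_pct in changes:
    pct = 100 * relative_change(delta_pp, base_pct)
    print(f"{group}: {delta_pp:+.2f} points on a base of {base_pct:.2f}% = {pct:+.1f}% relative change")
```

Under that assumption, the black and hispanic shares at the top 50 public colleges fall by roughly 30% and 28% of their base, while the Asian and white shares rise by roughly 6% and 5%.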

Hinrichs (2014, Tables 4 & 6) estimates the graduation rates with a regression including a ban dummy, state and year dummies, and state-specific linear time trends, using the IPEDS data between 2002 and 2009. There is a weak positive effect of the ban on four-year as well as six-year college graduation rates for both hispanic and black students. The strongest effect was an increase of 2.36% (off their base of 66.65%) in six-year graduation for Hispanics attending public universities in the top two tiers of the U.S. News rankings. The corresponding effect for Blacks is an increase of 1.4% (off their base of 61.44%). The share of Blacks in four-year colleges who get a degree within six years falls by about 1% in the top two tiers of the U.S. News rankings, while the share of Hispanics falls by a bit less. It is unknown whether the results are due to the changing composition of students at selective colleges.

Hinrichs (2020, Table 4) examined the impact of affirmative action ban across US states on state racial segregation, by using a regression with ban dummy, state dummies, year dummies, state-specific linear time trends as independent variables, for the time period 1995-2016, 1995-2003, 2004-2016. Segregation is measured by White exposure to Black, vice-versa, as well as Black-White dissimilarity. The overall effect is very small, but there is heterogeneity across time periods. The affirmative action bans of 1995-2003 are associated with less segregation, whereas the bans of 2004-2016 are associated with more segregation (but the effect appears to be a continuation of a trend that begins two years before the ban).

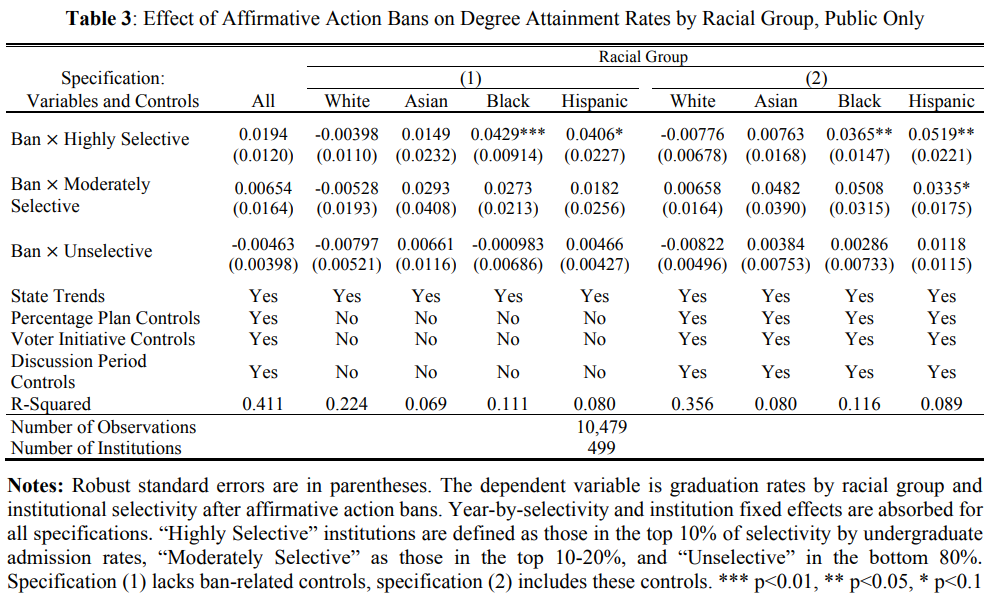

Ren (2020) examined the IPEDS data on public colleges, and used a difference-in-differences model with graduation rates as the dependent variable and ban-by-selectivity, year-by-selectivity and linear state-level graduation rate trends as predictors. When ban-related controls are included, the ban predicts an increase in graduation rates of 3.7% for blacks and 5.2% for hispanics at highly selective colleges (coefficients are significant), and 5.1% for blacks and 3.3% for hispanics at moderately selective colleges (coefficients have very large standard errors). There is a strong heterogeneity of effect: most majors aren’t affected by the ban but blacks and hispanics have increased graduation rates after the ban for STEM and social science majors (Table 4). A synthetic control model is then used as a counterfactual to determine what the graduation rates in these states would have been if the ban had not happened. The result shows that bans increased minority (but not non-minority) graduation rates at highly selective colleges. The finding that minority graduation increases indicates that mismatch effects rather than college quality effects dominate.

Lutz et al. (2019) use the NELS (cohort: 1992-2000) and ELS (cohort: 2006-2012) to evaluate the impact of AA ban on GPA and graduation in selective colleges. Models include parent SES, HSGPA, AP courses in high school, collegiate experiences, race dummy and interaction with AA ban state. The graduation models show that URMs have similar (or higher) chance of graduating compared to whites in the NELS (or ELS) and the interaction with AA ban state is positive (or negative) in the NELS (or ELS) but not significant in both datasets. The GPA models are uninformative due to the coefficients having large SEs and having quite different values and direction depending on the datasets. Selection bias is not addressed.

An important argument made for AA is that it need not be permanent. AA plans can be discontinued once they have transformed employers’ attitudes. Myers (2007, Table 5) analyzed the Current Population Survey from 1994-2001 to gauge the impact of Prop 209 on the labor force when its impact came into effect in 1999. Myers uses a triple-difference model with year dummy, California state dummy, minority group dummy and their interaction, along with covariates such as age, marital status, education attainment, central city location, nativity, citizenship. Probit models of employment and non-participation indicate that nearly all URM by gender groups show a decline in employment, which is almost fully accounted for by a rise in non-participation (i.e., a decline in labor force participation). The pattern was nearly identical whether the 1995-1999 or 1995-2001 period was analyzed. Wage regressions indicate that the coefficients were close to zero or negative but with large standard errors. A safe conclusion is that AA either failed to achieve its goal or needs to be permanent.
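A triple-difference estimate is simply the difference between two DiD estimates. The sketch below illustrates the arithmetic with invented employment rates by state, group and period; it is not Myers' probit specification, it ignores covariates, and the cell values and names are hypothetical.

```python
# Hypothetical employment rates keyed by (in_california, is_urm, is_post_ban). Invented numbers.
employment = {
    (1, 1, 0): 0.60, (1, 1, 1): 0.57,
    (1, 0, 0): 0.70, (1, 0, 1): 0.71,
    (0, 1, 0): 0.61, (0, 1, 1): 0.62,
    (0, 0, 0): 0.71, (0, 0, 1): 0.72,
}


def did_within_state(cells, in_california):
    """(URM change over time) minus (non-URM change over time) within one set of states."""
    urm_change = cells[(in_california, 1, 1)] - cells[(in_california, 1, 0)]
    other_change = cells[(in_california, 0, 1)] - cells[(in_california, 0, 0)]
    return urm_change - other_change


def triple_difference(cells):
    """California DiD minus the DiD in comparison states."""
    return did_within_state(cells, 1) - did_within_state(cells, 0)


print("California DiD:", round(did_within_state(employment, 1), 4))
print("Comparison-state DiD:", round(did_within_state(employment, 0), 4))
print("Triple difference:", round(triple_difference(employment), 4))
```

The third difference removes any nationwide shift in the URM versus non-URM gap that would otherwise be attributed to the ban.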

6. Differential effect of AA in STEM?

It was argued (e.g., by Arcidiacono) that another reason why mismatch effects are inconsistent is that their impact is larger in STEM, which is more cognitively demanding than other fields. The results are not always consistent, yet many studies (mentioned above) found a differential effect.

Arcidiacono et al. (2016) use counterfactual models to simulate the change in graduation rate in STEM for minorities and non-minorities if they attended one of the bottom two campuses (Santa Cruz and Riverside) instead of the top two campuses (Berkeley and UCLA) after the ban, Prop 209, in California. Control for self-selection on unobservables is done following Dale & Krueger (2014). If the students stayed in STEM field, the graduation rate showed a non-trivial increase for both minorities and non-minorities, the exception being the highest levels (quartiles) of academic preparation showing a decrease in graduation at Santa Cruz and no change at Riverside among non-minorities. The authors then use counterfactuals again to simulate how the change in base assignment rules affects graduation of minority and non-minority when the opposite group’s assignment rules are used. Minorities and non-minorities experienced a modest increase and decrease, respectively, in graduation rates if they stayed in STEM field. Overall these findings show that less (more) selective campuses give an advantage in graduation for less (more) prepared students and minority students in STEM.

Bleemer (2022, Tables 3-4) evaluates the impact of Prop 209 in California on the outcomes of URMs and non-URMs, by using a DiD approach with URM, URM*Prop209 interaction, and fixed effects of high school and academic ability (weighted average of GPA and SAT). The AA ban indeed lowered URMs’ probability of earning bachelor degree and graduate degree (estimates are imprecise, as the SEs are very large) but this negative trend is mainly driven by the bottom quartile of ability. Moreover, the URMs’ probability of getting a STEM degree after the ban decreased by 1.23% (SE=0.65) at the bottom quartile but improved by 0.81% (SE=0.96) at the top quartile of ability. Finally, the AA ban reduced black and hispanic log of wage by 0.03 and 0.05 for both the 6-16 years and 12-16 years after UC application, a rather small effect for the black group. Main analyses are robust to adding more covariates, indicating no selection bias on observables that could affect application deterrence. To explain why these results contradict Arcidiacono et al. (2016) on STEM, Bleemer (2020, pp. 4-5) argued that the two studies used different observations but also different definitions of nonscience major.
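To see what the quoted standard errors imply, one can convert each point estimate and SE into a z-statistic, a two-sided p-value and a 95% confidence interval. The sketch below uses a plain normal approximation, which is an assumption on my part: the paper's own inference may rely on clustered standard errors or a different reference distribution.

```python
from statistics import NormalDist


def summarize(estimate, se, level=0.95):
    """z-statistic, two-sided p-value and confidence interval under a normal approximation."""
    standard_normal = NormalDist()
    z_crit = standard_normal.inv_cdf(0.5 + level / 2)
    z = estimate / se
    p_value = 2 * (1 - standard_normal.cdf(abs(z)))
    return {"z": z, "p": p_value, "ci": (estimate - z_crit * se, estimate + z_crit * se)}


# STEM-degree estimates quoted above (in percent), bottom and top ability quartiles.
bottom = summarize(-1.23, 0.65)
top = summarize(0.81, 0.96)

for label, res in (("bottom quartile", bottom), ("top quartile", top)):
    low, high = res["ci"]
    print(f"{label}: z={res['z']:.2f}, p={res['p']:.3f}, 95% CI=({low:.2f}, {high:.2f})")
```

Under this approximation both intervals include zero: the bottom-quartile decline is borderline (p between 0.05 and 0.10) and the top-quartile improvement is far from significant.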

Hill (2017, Tables 4, 7, 9) examines STEM degree completions among minorities 5 years after the ban in a given US state (California, Florida, Texas, Washington), using the IPEDS data. The DiD model involves interaction effects of ban dummy with different levels of selectivity (highly, moderately, non selective), along with fixed effects of institution and year by college selectivity (i.e., year * each level of selectivity) and linear state trend. For STEM programs at highly selective public colleges, the share of URM falls by 1.64% and 2.04%, when not including and including top-x percent plan as covariate, respectively, relative to the mean of 16.15%. For non-STEM programs at highly selective colleges, the share of URM falls by 3.53% and 3.05% relative to the mean of 22.09%. The coefficients for moderately and non-selective public colleges were close to zero (SEs were also very large). The larger effect in non-STEM indicates that mismatch effect is slightly less evident in less challenging non-STEM programs. The estimates for STEM may be upwardly biased because, after the ban, there is an increase in the number of students of unknown race completing STEM degrees. This is because minorities no longer have an incentive to report their race after AA bans. Although the mismatch is validated here, the effect might be small.

Mickey-Pabello (2023, Tables 5-7) uses a more representative dataset, composed of the IPEDS and CPS from 1991 to 2016, to evaluate the impact of the ban on URM students across school selectivity (measured with college GPA or Barron’s index). STEM is categorized using 2 different taxonomies. A multilevel regression, controlling for ban status, time-varying state characteristics (e.g., racial demographics, education, unemployment rate), institutional characteristics (e.g., enrollment size, % of students receiving financial aid, institutional selectivity, cost of tuition), linear year, state dummy*linear year interactions, with colleges and states as levels and state and year fixed effects, is used to predict the proportion of STEM or non-STEM degrees. Public schools show a decline in STEM degrees using either taxonomy while private schools show a decline or an increase depending on the taxonomy. The absolute values of the decline in STEM and non-STEM degrees for either public or private schools are small-to-modest. The decline in STEM and non-STEM degrees is stronger over time, generally reaching 1% at the 6-year or 9-year post-ban period for public and private schools, with the exception of STEM at private schools. There is one instance of a small/modest increase in STEM degrees for public and private schools of any selectivity, highly and less selective, but only when the time period is restricted to 1991-2009.

Black et al. (2023), as discussed earlier, found that the Top 10% policy increased attainment of a bachelor's degree within 6 years from any institution but not attainment of a bachelor's degree with a STEM major.

One finding that is not disputed is the higher odds among minorities of switching out of STEM, but it does not appear to be related to group differences in cognitive ability. Riegle-Crumb et al. (2019, Table 1) calculate the probability of switching out of a STEM major for blacks and hispanics compared to whites in the BPS data. Before and after controlling for academic preparation (SAT, HSGPA, advanced math and science course-taking), social background and institutional characteristics, blacks have an increased likelihood of 0.194 and 0.187 while hispanics have an increased likelihood of 0.126 and 0.098 of switching out. It is still possible that unmeasured differences in college readiness explain the gap in switching out.

7. Reinstatement of Affirmative Action.

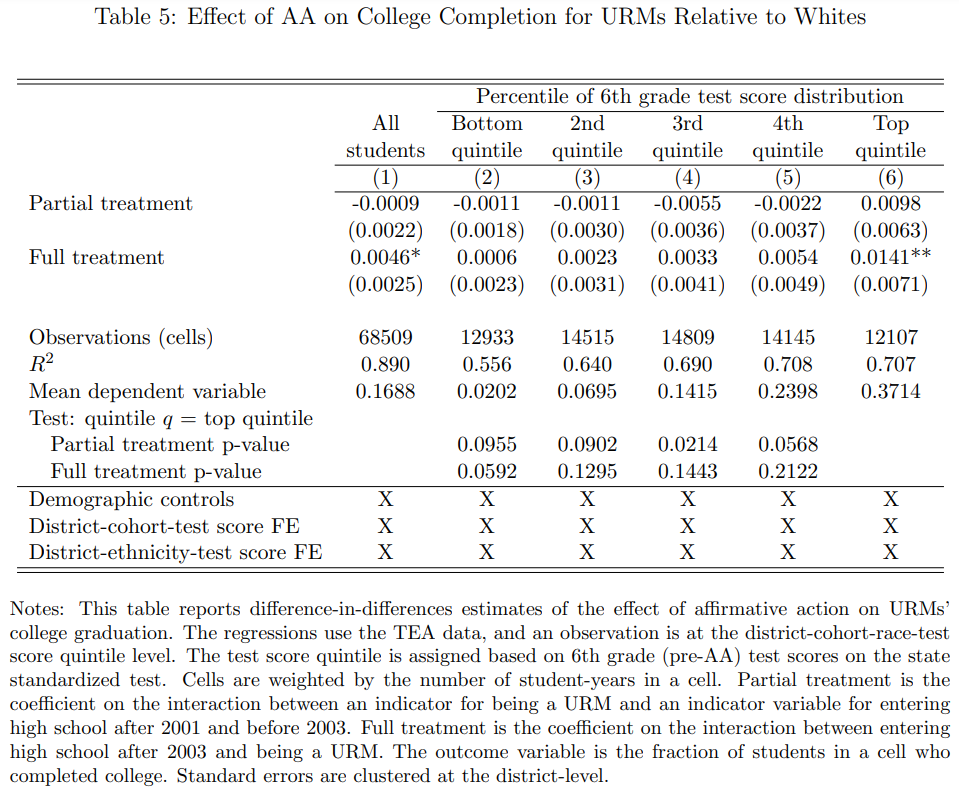

Akhtari et al. (2020, Table 5) studied the impact of the reinstatement of affirmative action in three US states (Texas, Louisiana, and Mississippi) on SAT-Math, SAT-Verbal, college application, attendance and completion. The DiD model with state, year and race fixed effects shows no impact on SAT-V and a trivial increase in SAT-M of 8 points for URMs and 4 points for whites. A triple-differences model estimates that URMs improved over whites by 4 points in SAT-M, equivalent to 0.035 SD. The DiD model with URM * partially treated and URM * fully treated cohort interactions, as well as fixed effects of state, race and test scores, shows that the fully treated URMs who belong to the 3rd to top (but not the lowest) quintiles of grade score increased their application rates relative to whites. The DiD model with URM * fully treated cohort, along with school-cohort and race-specific fixed effects and lagged values of grade, shows that the URM-white gap in school grades was reduced by 0.9 points, equivalent to 0.1 SD. The DiD model with URM * fully treated cohort, and fixed effects (district, cohort, test, ethnicity), shows a gain of 0.22% and 0.15% in 10th and 11th grade attendance for URMs over whites. The DiD model with URM * partially and URM * fully treated cohorts, as well as the prior fixed effects, shows that URMs did not improve their college completion rate over whites, except for the top quintile of grade score among fully treated cohorts, and then only by a meager 1.4%. The authors astonishingly concluded the reinstatement was a success despite the data suggesting otherwise.
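
The relation between the two DiD estimates and the triple-differences estimate can be sketched with simple cell means. The cell means below are hypothetical (they are not from the paper) and were picked only so that the two DiD estimates equal the 8 and 4 points quoted above; the `did` helper is likewise an illustrative name.

```python
def did(treated_pre, treated_post, control_pre, control_post):
    """Difference-in-differences from four cell means."""
    return (treated_post - treated_pre) - (control_post - control_pre)


# Hypothetical SAT-M cell means, chosen only to reproduce the quoted estimates.
did_urm = did(treated_pre=450.0, treated_post=462.0,
              control_pre=455.0, control_post=459.0)
did_white = did(treated_pre=520.0, treated_post=527.0,
                control_pre=525.0, control_post=528.0)

# The triple difference is the URM DiD minus the white DiD.
triple_diff = did_urm - did_white

# If 4 points correspond to 0.035 SD, the implied SAT-M SD follows directly.
implied_sd = triple_diff / 0.035

print(did_urm, did_white, triple_diff, round(implied_sd, 1))
```

The implied SD of about 114 points is in the usual range for an SAT section, which is a quick sanity check that a 4-point relative gain is indeed tiny.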

8. Affirmative Action against Asians.

Arcidiacono et al. (2022a, Table 6) observed how Harvard admission penalizes Asians, in contrast with the racial preferences in the personal(ity) rating favoring blacks and hispanics, using data collected from the classes of 2014-2019. Despite Asians having better academic ratings than whites, they are admitted at much lower rates than whites when controlling for academic ability (weighted average of SAT and GPA). The main reason is that the personal(ity) rating is strongly related to admission and Asians score by far the lowest among all racial/ethnic groups on this vaguely defined personality scale used by Harvard. The authors simulated the Asian probability of admission if they were treated like white applicants, by using the log odds formula, and found a non-trivial increase in the odds. On average, Asians suffer a penalty of 1.02 percentage points off their base admit rate of 5.19% due to Harvard’s policy. There is large heterogeneity in the effects: being an Asian male and/or not disadvantaged is associated with an even greater gain in the probability of being admitted if treated equally.
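
The log odds approach can be illustrated as follows. This is a minimal sketch rather than the authors' actual model: it applies a single group coefficient to the average admit rate, whereas the paper works with applicant-level predicted probabilities, and it assumes the counterfactual rate is the base rate plus the quoted penalty. The function names and `implied_coef` are made up for the example.

```python
import math


def logit(p):
    """Log odds of a probability."""
    return math.log(p / (1.0 - p))


def inv_logit(x):
    """Probability corresponding to a log odds value."""
    return 1.0 / (1.0 + math.exp(-x))


def counterfactual_prob(p_observed, group_coef):
    """Admission probability once the group coefficient is set to zero."""
    return inv_logit(logit(p_observed) - group_coef)


base_rate = 0.0519   # observed Asian admit rate quoted in the text
penalty_pp = 1.02    # quoted penalty, in percentage points
target_rate = base_rate + penalty_pp / 100.0  # assumed counterfactual rate

# Hypothetical single coefficient that would produce the quoted penalty.
implied_coef = logit(base_rate) - logit(target_rate)
relative_penalty = 100.0 * penalty_pp / (100.0 * base_rate)

print(round(implied_coef, 3), round(counterfactual_prob(base_rate, implied_coef), 4))
print(f"penalty relative to base rate: {relative_penalty:.1f}%")
```

Expressed relative to the base rate, a penalty of about one percentage point amounts to nearly a fifth of the Asian admit rate, which is why it is non-trivial at such a low baseline.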

Arcidiacono et al. (2022b, Table 11; note: two of their columns are mistakenly swapped) calculate the average admission probabilities based on academic ability but absent racial preferences, by setting race coefficients (main and interaction effects) to zero. In this case the number of black admits at Harvard, out-of-state UNC, and in-state UNC drops by 72.1%, 87.7%, and 35.5%, respectively. The number of hispanic admits drops by 51.1%, 59.5%, and 17.6%. On the other hand, the number of Asian admits increases by 39.7% at Harvard, 20.8% at out-of-state UNC, and 4.6% at in-state UNC.

Bleemer (2022, Table 6) uses a DiD model with an Asian dummy and an Asian*Prop209 interaction. The ban did not cause Asians to enroll more in the most selective campuses, yet they became more likely to enroll in the least selective ones.

Grossman et al. (2023, Table 1) use a large dataset composed of 685,709 applications to a subset of selective U.S. institutions from the 2015-2016 through the 2019-2020 application cycles. Results from a logistic regression, controlling for SAT/ACT, GPA, extracurricular activities, gender and family characteristics, show that East Asian and South Asian groups have, respectively, 17% and 48% lower odds of college admission relative to whites. The difference is reduced a bit once location is also added as a covariate.
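
Lower odds are not the same as lower probabilities, so it can help to translate the quoted odds ratios into admit rates. The sketch below assumes a purely hypothetical white admit rate of 20% (the text does not report a baseline), and `prob_from_odds_ratio` is an illustrative helper.

```python
def prob_from_odds_ratio(baseline_prob, odds_ratio):
    """Probability implied by applying an odds ratio to a baseline probability."""
    baseline_odds = baseline_prob / (1.0 - baseline_prob)
    odds = baseline_odds * odds_ratio
    return odds / (1.0 + odds)


# Odds ratios implied by "17% and 48% lower odds" relative to whites.
odds_ratios = {"East Asian": 1.0 - 0.17, "South Asian": 1.0 - 0.48}

# Hypothetical baseline, chosen only for illustration.
hypothetical_white_rate = 0.20

for group, ratio in odds_ratios.items():
    p = prob_from_odds_ratio(hypothetical_white_rate, ratio)
    print(f"{group}: {100 * p:.1f}% vs {100 * hypothetical_white_rate:.1f}% for whites")
```

The gap in probability terms depends on the baseline: the closer the baseline admit rate is to zero, the closer the relative drop in probability is to the relative drop in odds.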

9.1. Affirmative action in Brazil.

Francis & Tannuri-Pianto (2012, Table 7) looked at the 2004 quota policy adopted by the University of Brasília. They focused on skin color group differences since darker skinned people have lower SES. Their DiD model includes SES variables, entrance exam (called vestibular), subject area, and self-reported brown-skinned and dark-skinned groups (pardo and preto) as main effects and in interaction with the postquota period (light-skin or branco serves as the reference skin color). The GPA model equation shows that the coefficients of postquota * pardo/preto are small (with very large SEs), indicating that AA did not affect the GPA gap between skin color groups, although the coefficients for pardo and preto indicate modest group differences but only among selective schools. An alternative model with skin color quintile (measured by photo ratings) and postquota interaction shows these coefficients are positive but very imprecise, as evidenced by their very large SEs. A model using two measures of effort as dependent variables, the number of times that an applicant took the vestibular exam and whether an applicant took a private course to prepare for the vestibular, shows that pardo (i.e., brown skin) and the lightest skin quintile groups were more likely to take a private course after the policy, but these results are ambiguous due to lack of agreement between skin color measures. A final analysis, which uses self-reported negro race as the dependent variable, shows that some groups (dark-skinned and darkest quintile) are more likely to self-report as negro in the postquota compared to the prequota period. The findings of this study are therefore not robust.

Otero et al. (2021) analyze the Brazilian centralized admission system in 2009-2015. They use counterfactual models to simulate the 2012 policy, which requires schools to reserve 50% of seats for targeted students (low-income African or indigenous descent), and a situation with no AA at all. Under the AA policy, cutoffs (i.e., selectivity) are higher for open spots than for reserved ones (for targeted students), but under no AA the distribution of cutoffs for all students is much closer to the distribution for open seats under AA (Figures 9 & A9). Counterfactuals are then used to estimate the predicted future income with and without AA over the exam test score distribution. They account for selection into degrees based on unobservable “preferences” (defined as potential outcome gains). Results show that only the high-scoring students are affected, positively among targeted and slightly negatively among non-targeted students; on average, there is an income gain of 1.16% for targeted students and an income loss of 0.93% for non-targeted students.

Valente & Berry (2017) compare the performance of students on the national exam (ENADE) from 2009 to 2012 based on whether they were admitted through AA or traditional means. They use OLS regressions, run separately for public and private universities, with independent variables such as AA, racial quota, social quota, nonwhite, female, age, income, public high school, and parent education, along with dummies for majors and time fixed effects. For public universities, racial quota is associated with an exam score decline of 1.2 points (on a 100-pt scale). For private universities, racial quota is associated with an exam score increase of 3.8 points. The reason for the difference might be that private universities require quota students to have high scores to continue receiving the scholarship.

Assunção & Ferman (2015, Table 4) examined the 2003-2004 admission process in Rio de Janeiro, using the SAEB survey data. The law in 2002 (2003) required that 50% (20%) of admitted students had to have attended public schools and 40% (20%) of admitted students had to be black. This caused a drastic change in the student composition because the percentage of students in medicine and law schools who were black in the 2002 admission was actually close to zero. The DiD model, in which the control group is composed of states without quotas, includes student and school characteristics as controls. The dependent variable, student proficiency, is measured through a math or Portuguese exam and is calibrated using IRT to make it comparable across time. The coefficient of treatment effect*2003 for blacks in public schools in Rio de Janeiro was -0.267, indicating that blacks had 0.267 SD lower exam scores in 2003 owing to the introduction of the quota. Results from private schools or other groups (mulatto, white) or another state with a quota (i.e., Bahia) were not significant due to large standard errors. A robustness check shows that favored 8th grade students are not differentially affected by the policy compared to favored 11th grade students between 2001 and 2003 after adjusting for state-level variables (Table 6). Another robustness check revealed that there was no negative trend in favored students’ proficiency between 1999 and 2001 (i.e., prior to the quota system), and that the treatment effect did not change the probability of self-reporting as black or the observable characteristics of the treated group.
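
How a DiD coefficient such as the -0.267 above is recovered from student-level records can be sketched with simulated data. Everything here is synthetic: the baseline group and period differences, the noise level and the sample size are assumptions for illustration, not values from the SAEB data, and the sketch omits the covariates used in the paper. Without covariates, the interaction coefficient of a treated * post regression equals the difference of the four cell means, which is what `did_from_records` computes.

```python
import random
from statistics import mean


def did_from_records(records):
    """DiD estimate from (treated, post, outcome) tuples via the four cell means."""
    cells = {(t, p): [] for t in (0, 1) for p in (0, 1)}
    for treated, post, outcome in records:
        cells[(treated, post)].append(outcome)
    m = {key: mean(values) for key, values in cells.items()}
    return (m[(1, 1)] - m[(1, 0)]) - (m[(0, 1)] - m[(0, 0)])


def simulate(n, effect, noise_sd, seed=0):
    """Synthetic proficiency scores in SD units with a known treatment effect."""
    rng = random.Random(seed)
    records = []
    for i in range(n):
        treated, post = i % 2, (i // 2) % 2  # balanced across the four cells
        # Hypothetical baseline gaps: +0.10 for the quota state, -0.05 for 2003.
        score = (0.10 * treated - 0.05 * post
                 + effect * treated * post + rng.gauss(0.0, noise_sd))
        records.append((treated, post, score))
    return records


estimate = did_from_records(simulate(n=4000, effect=-0.267, noise_sd=1.0))
print(round(estimate, 3))
```

With noisy data the estimate only approximates the true effect, which is why the large standard errors reported for the other groups leave those results uninformative rather than evidence of no effect.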

Estevan et al. (2019, Tables 4 & 9) examine the 2004 policy adopted by UNICAMP, a highly ranked public college. UNICAMP awarded a large 30-point bonus (equal to 0.30 SD) in admission exam score to students from disadvantaged backgrounds, i.e., public high school applicants, and an additional 10 points to visible ethnic minorities, while private school applicants received no bonus. A DiD model with AA, public high schools and visible minorities (black, mulatto, or native) main and interaction effects, while controlling for student characteristics (such as ENEM, i.e., high school exam scores), municipality, major choice, and year fixed effects, shows that AA increased the admission rate of public school applicants, and also increased the white-minority gap in admission rate for private schools but not for public schools. The displacing and displaced students did not differ in their ENEM scores as a result of AA. A potential confound comes from changes in exam-preparation effort due to AA. Effort is measured by the public-private school difference in admission exam scores, conditioning on ENEM scores. Results show no public-private performance gap. When this analysis is repeated for each of the four quartiles of ENEM score, public applicants improved their performance relative to private applicants only at the bottom quartile, but the change may not be large enough to affect their actual acceptance rate. A final analysis shows that the public-private performance gap after AA is not affected by applicants' distance from the cutoff, suggesting no differential effort.

Oliveira et al. (2023, Table 3) use administrative data to compare quota and non-quota students enrolled at UFBA between 2003 and 2006. Their DiD model includes a dummy for cohorts affected by the policy, student characteristics, and major and cohort fixed effects. Then, a triple-difference model is used to identify treatment effects for potential quota students who were able to enroll only due to the policy and for students who would have been admitted even without the policy. Quota students in technology majors are less likely to graduate, even after controlling for entry exam score, but this effect is entirely driven by students who would not have been admitted without quotas. Furthermore, students who would not have been admitted without quotas do not have worse outcomes in terms of failure rates or graduation rates in health sciences (regardless of exam score controls) or social sciences and humanities (after exam score controls). When the sample is restricted to students who graduated, and controlling for exam score, quota students did not underperform in health sciences or social sciences and humanities. In technology majors, however, the quota students who would not have been admitted without quotas had a lower GPA in the first semester and an even lower GPA at the end of the major. The authors surprisingly concluded that the AA policy was a success despite evidence to the contrary. The supposed catch-up effect (i.e., the gap in GPA decreases by the end of the major) is only apparent for the whole quota group, not for quota students in technology majors who would not have been admitted without the policy.