It goes without saying that multiple regression is one of the most popular and widely applied statistical methods. It would therefore be odd if most practitioners among scientists and researchers did not understand it and misapplied it. And yet, this provocative conclusion seems the most likely one.

Because a simple bivariate correlation does not disentangle confounding effects, multiple regression is said to be preferred. The technique attempts to evaluate the strength of an independent (predictor) variable in the prediction of an outcome (dependent) variable, when controlling for, i.e., holding constant, every other variable entered (included) as an independent variable in the regression model, either progressively step by step or all together at the same time. The rationale is to get the effect of an independent variable that belongs only to it. But this is a fallacy.

And this fallacy has (at least?) 2 variants.

1. If predictor x1 has a greater regression coefficient than predictor x2, then x1 is the best predictor of the outcome (dependent) variable Y.

2. If x1 is correlated with Y but its effect disappears (or weakens) with the inclusion of x2, while x2 remains correlated with Y, it follows that x1 is mediated by x2.

On the other hand, the following statement is correct.

3. If the addition of a new variable x3 (entered in a new step) does not add to the prediction of the dependent variable, either looking at the (misleading) r² or the regression coefficient of x3, then x3 is said to have no independent or direct effect.

Given the above statements, we understand why the summary that appears in virtually all articles that make use of multiple regression is misleading:

4. Variable x1, controlling for x2 and x3, has a large/weak/null effect on variable Y.

Let’s remember that multiple regression is used to test either one of two hypotheses:

a. To decide which predictor is the strongest among all other included (independent) variables.

b. To determine if the predictor variable(s) we are interested in has (have) an independent effect on the outcome (dependent) variable.

While “b” can be tested, the “a” hypothesis cannot. At least, not with the classical method that uses cross-sectional data. As Cole & Maxwell (2003) explained, regression with longitudinal data is the only way to establish causality and, by the same token, to disentangle the pattern of direct and indirect effects. This is because causality deals with changes, that is, the impact of the changes in variables x1 and x2 on the changes in variable Y. But change can only be observed with repeated measures, and only if the variables are allowed to change meaningfully over time, which means that a variable such as education level (among adults) should never be used because it is completely invariant over time. If little care is given to time variability, we run into the problem of spurious causal effects that may arise from unequal reliability. That’s why Rogosa (1980) emphasizes the importance of equal stability and reliability. Suppose a model with variables X1, X2, Y1, Y2, where X1 is correlated with Y1, and both predict the two variables (X and Y) at time 2. If X1->Y2 has the same path correlation as Y1->X2, this can be consistent with a hypothesis of equal mutual causation. But it is also consistent with unequal causal effects, if the stability (the correlation of X1 with X2, or of Y1 with Y2) differs between X and Y. The variable having more stability will have its causal path underestimated. A latent variable approach attenuates the underlying problem somewhat but does not get rid of it in all instances.
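The stability argument can be made concrete with a little path arithmetic. What follows is only a sketch under my own assumptions: all variables are standardized, the model is a linear two-wave one, the quantities being compared are the cross-lagged correlations, and every number is hypothetical (none comes from Rogosa or from any data set).

```python
# Sketch of the unequal-stability problem, assuming standardized variables in
# a two-wave linear model. All numbers are hypothetical, for illustration only.

def cross_lagged_correlation(cross_path, stability_of_outcome, r_time1):
    """Correlation between a time-1 variable and the other variable at time 2.

    By the path tracing rules it equals the direct cross-lagged path plus the
    route passing through the time-1 correlation and the outcome's own
    stability path.
    """
    return cross_path + stability_of_outcome * r_time1

r_x1_y1 = 0.5      # correlation between X1 and Y1
stability_x = 0.8  # path X1 -> X2 (X is the more stable variable)
stability_y = 0.4  # path Y1 -> Y2
path_x1_y2 = 0.3   # causal path X1 -> Y2
path_y1_x2 = 0.1   # causal path Y1 -> X2

r_x1_y2 = cross_lagged_correlation(path_x1_y2, stability_y, r_x1_y1)
r_y1_x2 = cross_lagged_correlation(path_y1_x2, stability_x, r_x1_y1)

print(f"r(X1, Y2) = {r_x1_y2:.2f}, r(Y1, X2) = {r_y1_x2:.2f}")
print(f"path X1->Y2 = {path_x1_y2}, path Y1->X2 = {path_y1_x2}")
```

With these made-up values the two cross-lagged correlations come out equal, even though the path from X to Y is three times the path from Y to X. Reading the equal correlations as equal mutual causation would therefore understate the influence of the more stable variable.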

In the field of psychometrics and psychology, the commonly used method is the Structural Equation Model (SEM, available in R, AMOS, LISREL, Mplus, Stata, etc.), in conjunction with repeated measures taken at different points in time. In the field of econometrics, it is the Error Correction Model (ECM, available in R or Stata) that is commonly used to determine causality, in conjunction with time series. Because I have never practiced ECM and don’t see how I could illustrate it, I suggest instead reading Michael Murray (1994), Smith & Harrison (1995), and Robin Best (2008) for an introduction. I will now focus on SEM to illustrate the problem of the “hidden” indirect effect in the classical regression methods.

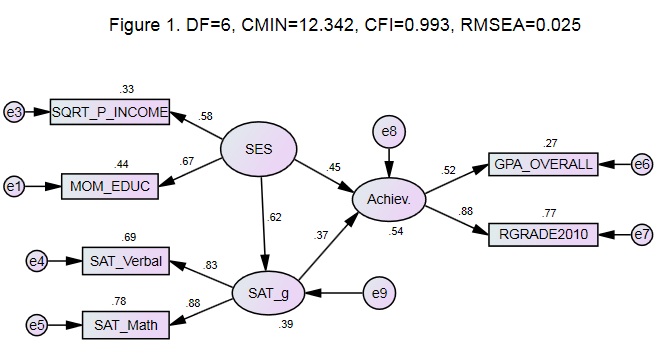

Here, I show 3 equivalent SEM models (data from NLSY97) taken from the worthless analysis I did before. They differ in their theoretical (conceptual) sense but not in their mathematical sense (Tomarken & Waller, 2003, p. 580). That’s why they all have the same path coefficients and model fit.

In figures 1 and 2, we have illustrated two different patterns of causation, although in the conceptual sense only, and in figure 3, we have defined the independent variables to be correlated but the causal path has not been made explicit, which is why the arrow is double-headed. In figures 1 and 2, we assume we know the causational pathway whereas in figure 3 we assume we don’t know anything. How do we calculate the total effect? As explained before, the paths along an indirect route must be multiplied, and the product is then added to the direct path. In figure 1, the total effects of SES and SAT are 0.62*0.37+0.45=0.679 and 0.37, respectively. In figure 2, they are 0.45 and 0.62*0.45+0.37=0.649. We already see the dramatic consequences of ignoring the widespread problem of equivalent models (MacCallum et al., 1993). In figure 3, there is no decomposition, thus no indirect effect. Figure 3, in fact, illustrates what really happens in multiple regression.
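The arithmetic above can be reproduced in a few lines. This is only a sketch: the three coefficients are the ones quoted in the text, while the helper `total_effect` and the generic name "outcome" for the dependent variable are mine.

```python
def total_effect(direct, indirect_routes=()):
    """Direct path plus, for each indirect route, the product of its paths."""
    total = direct
    for route in indirect_routes:
        product = 1.0
        for coefficient in route:
            product *= coefficient
        total += product
    return total

# Coefficients quoted in the text.
SES_SAT = 0.62  # path linking the two predictors
SES_OUT = 0.45  # direct path SES -> outcome
SAT_OUT = 0.37  # direct path SAT -> outcome

# Figure 1: SES -> SAT -> outcome, so only SES has an indirect route.
figure1 = {
    "SES": total_effect(SES_OUT, [(SES_SAT, SAT_OUT)]),
    "SAT": total_effect(SAT_OUT),
}
# Figure 2: SAT -> SES -> outcome, so only SAT has an indirect route.
figure2 = {
    "SES": total_effect(SES_OUT),
    "SAT": total_effect(SAT_OUT, [(SES_SAT, SES_OUT)]),
}

for name, effects in (("figure 1", figure1), ("figure 2", figure2)):
    print(name, {variable: round(value, 3) for variable, value in effects.items()})
```

The same three coefficients give SES the larger total effect under figure 1 and SAT the larger one under figure 2, which is the whole problem: the ranking depends on an arrow that the data cannot choose.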

To demonstrate more effectively that multiple regression (MR) does not really estimate the full effects of the independent var., I will use real data, specifically the Osborne (1980) twin data that C.V. Dolan provided me a while ago. It’s available here. I use the same regression model in both SPSS and AMOS to show the similarity of the correlations. Usually, SEM software deals with missing data through procedures such as maximum likelihood, whereas multiple regression traditionally uses listwise deletion. Since the IQ data I am using have no empty cells but instead have 5 missing values coded as -99.00, that code must be declared as missing in SPSS before running the analysis. The path correlations will not be differentially affected by the method. And here, we have a clear demonstration that what the Beta coefficient estimates in MR is nothing more than the portion that was labeled the “direct” path in SEM.
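The same identity can be checked without the twin data, using only the coefficients quoted for figures 1-3. The sketch below assumes those coefficients are standardized, so that the correlations of SES and SAT with the outcome are 0.6794 and 0.649 (the total effects computed earlier). It then solves the ordinary normal equations of a standardized multiple regression with NumPy. The variable names are mine.

```python
import numpy as np

# Correlations implied by the figures (assumption: standardized coefficients).
r_ses_sat = 0.62
r_ses_out = 0.6794  # 0.45 + 0.62 * 0.37
r_sat_out = 0.6490  # 0.37 + 0.62 * 0.45

# Standardized regression weights solve R_xx @ beta = r_xy.
R_xx = np.array([[1.0, r_ses_sat],
                 [r_ses_sat, 1.0]])
r_xy = np.array([r_ses_out, r_sat_out])
betas = np.linalg.solve(R_xx, r_xy)

print("Beta(SES), Beta(SAT):", np.round(betas, 3))
```

The two Betas come back as the direct paths (0.45 and 0.37), not as the total effects. Whatever part of a predictor's correlation with the outcome runs through the other predictor is simply absent from its regression coefficient.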

In classical regression, indeed, the common variance that has been removed in order to estimate the independent effect of each predictor is just a tangle of correlations. And it is not possible to make sense of it. For this reason, SEM used in conjunction with repeated measures is capable of estimating the full effect of each variable as well as the nature of the indirect effects. The examples presented in figures 1-3 are no more and no less than fake causation models. That is, they are causational only in a conceptual sense.

More often than not, SEM is used with cross-sectional data. In such cases, the indirect effects among the predictors are no more and no less than a massive tangle of correlations. As the above equivalent models make crystal clear, the equality in fit and in path correlations proves that none of these models can be singled out as the best approximation (i.e., fit) to the data. And yet it is not uncommon (at least, for me) to see researchers, in their presentations and even in academic papers, making unjustified claims regarding the causal path. That is, if someone proposes in the introduction of the article that motivation correlates with scholastic achievement only through its effect on IQ, then he expects to see full mediation by IQ, and no independent effect of motivation. Then, he will draw the path diagram as depicted in Figure 1 or 2 above, and estimate the parameters and model fit. Arriving at such a conclusion amounts to saying that, to prove the nature of the causational pathway, we just need to draw a path motivation->IQ->achievement instead of IQ->motivation->achievement. Yes, just by drawing a simple arrow. This can’t be serious. And yet I remember many instances like the example outlined above. The dilemma of equivalent models could have been attenuated by a longitudinal SEM procedure. When the absence of longitudinal data makes it impossible to empirically test the different plausible causational pathways, the only way we can reject one pattern of causation is to advance a theory. For example, if I explain conclusively why children’s test scores cannot cause parental SES, then on purely theoretical grounds, I manage to reject the plausibility of this causational pathway. Another attempt to circumvent this dilemma is to use non-repeated measures at different points in time, with the suggestion that because 3 variables, X (independent var. at time 1), M (mediator var. at time 2) and Y (dependent var. at time 3) are measured at different times, one may make assumptions about the causality, e.g., X measured earlier than Y cannot be caused by Y. The problem with this SEM modeling assumption becomes salient when the same variables are measured in a different order (X at time 3, M at time 1, Y at time 2), since these variables may well show similar correlations. In most instances, anyway, I don’t see the authors trying to explain why they selected the causational model shown in their figures. Even if they can, it resolves only a part of the whole problem. As Cole & Maxwell (2003) argued, a true mediational effect is a causational one, and establishing it is impossible without the use of repeated measures over time. Therefore, with cross-sectional data, any selected causational path unavoidably depicts a mediation that is entirely static in nature. Because a mediation implies several steps, time must elapse between the measures. SEM does not (and cannot) atone for methodological flaws, for the same reason that there is no way to make sense of nonsense.
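That reversing an arrow leaves the fit untouched can be checked numerically. The sketch below is not what AMOS does internally. It assumes a saturated recursive path model fitted equation by equation with least squares, on simulated data with made-up coefficients, and the function `implied_covariance` is my own helper. It fits the ordering x1 -> x2 -> y and the reversed ordering x2 -> x1 -> y, then compares the covariance matrix each model implies.

```python
import numpy as np

def implied_covariance(sample_cov, order):
    """Fit a saturated recursive path model and return what it implies.

    Each variable is regressed on all variables that come earlier in `order`.
    Returns the model-implied covariance matrix and the path matrix, where
    paths[i, j] is the coefficient of variable j in the equation for i.
    """
    k = sample_cov.shape[0]
    paths = np.zeros((k, k))
    residual_var = np.zeros((k, k))
    for position, target in enumerate(order):
        earlier = list(order[:position])
        if earlier:
            cov_with_target = sample_cov[earlier, target]
            coefs = np.linalg.solve(sample_cov[np.ix_(earlier, earlier)],
                                    cov_with_target)
            paths[target, earlier] = coefs
            residual_var[target, target] = (sample_cov[target, target]
                                            - cov_with_target @ coefs)
        else:
            residual_var[target, target] = sample_cov[target, target]
    total = np.linalg.inv(np.eye(k) - paths)
    return total @ residual_var @ total.T, paths

# Simulated data with hypothetical coefficients; columns are x1, x2, y.
rng = np.random.default_rng(1)
n = 5000
x1 = rng.standard_normal(n)
x2 = 0.6 * x1 + rng.standard_normal(n)
y = 0.4 * x1 + 0.3 * x2 + rng.standard_normal(n)
S = np.cov(np.column_stack([x1, x2, y]), rowvar=False)

cov_a, paths_a = implied_covariance(S, [0, 1, 2])  # x1 -> x2 -> y
cov_b, paths_b = implied_covariance(S, [1, 0, 2])  # x2 -> x1 -> y

print("same implied covariance:", np.allclose(cov_a, cov_b))
print("same paths into y:", np.allclose(paths_a[2], paths_b[2]))
```

Both orderings reproduce the sample covariance matrix exactly, so any fit index computed from the observed and implied matrices is identical for the two models, and the paths into y do not move either. Only the story told by the arrow between x1 and x2 differs.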

Thus, in classical regression models, we are left with a big dark hole: the uncertainty about the indirect effects. If the effect of x1 is not moderated (i.e., weakened) in the least by any other independent var., e.g., x2 and/or x3, we can safely conclude that the total effect of x1 is composed only of a direct (i.e., independent) effect. If, on the contrary, x1 is moderated by x2 and/or x3, then there are 4 possibilities:

A. x1 causes x2.

B. x2 causes x1.

C. each variable causes the other (but not necessarily to the same extent).

D. x1 and x2 are caused by another, omitted variable.

Thus, if x1 is moderated (weakened) by x2, and if x1 causes x2 (partially or completely), it follows that x1 has an effect on Y both directly and indirectly (i.e., through its effect on x2). The indirect effect is the portion removed from the regression coefficient. The portion of the total effect that is removed can be said to fall into the “unknown area” where none of the 4 possibilities outlined above is made clear. Regression cannot disentangle these confounded effects. Thus, the statement that x1 is a stronger predictor than x2 makes sense only if we know everything about the areas of certainty and uncertainty and, therefore, the full effect of each variable. In light of this, the independent variables cannot be said to be comparable with one another, even if they are expressed as standardized coefficients. The predictors are certainly not competitors, despite researchers fallaciously interpreting them this way. What is even more insidious is that the direct and indirect effects can be of opposite signs (Xinshu, 2010). Thus, in multiple regression, not only is it impossible to judge the total effect of any variable, but it is also impossible to judge the direction of the correlation.

Obviously, we can attempt to get around this by stating that we only compare the relative strength of the direct effects of the variables. So far so good. The problem is that researchers never mean it this way but, instead, interpret the regression coefficients as the full effect of the independent variables when all others are held constant. So, to repeat, the following statement is highly misleading:

4. Variable x1, controlling for x2 and x3, has a large/weak/null effect on variable Y.

By variable, what is conventionally meant is its full effect, not its partial effect. That’s why the statement is complete nonsense. Consider the case of a variable x1 that has a strong effect on Y but all of its effect is indirect (given the set of other predictors). Then, its regression coefficient will necessarily be zero. On the other hand, x2 can have a small total effect on Y but all of its effect is direct (given the set of other predictors). Then, its regression coefficient will be non-zero. Thus, it follows that the size of the regression coefficient depends solely on the strength of the direct effect of the independent variable. The decomposition of the full effect into its direct and indirect effects, as can be nicely modeled in SEM, is useful if we need to understand the nature of the mechanisms at work behind the correlation-causation. But when we try to draw a global picture of the full “unbiased” impact of the considered variables, it goes awry and gets ugly.
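This case is easy to simulate. The sketch below uses made-up data and coefficients. To keep x2 as the predictor with a small, purely direct effect, I introduce a third predictor, x3, that carries all of x1's effect on Y. The helper `ols` and all the numbers are my own assumptions, and the coefficients are unstandardized.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

# Hypothetical causal structure: x1 -> x3 -> y (x1 has no direct path to y),
# while x2 has a small direct effect and is unrelated to the others.
x1 = rng.standard_normal(n)
x3 = 0.7 * x1 + rng.standard_normal(n)
x2 = rng.standard_normal(n)
y = 0.8 * x3 + 0.2 * x2 + rng.standard_normal(n)

def ols(predictors, outcome):
    """Least-squares slopes (intercept fitted but not returned)."""
    design = np.column_stack([np.ones(len(outcome))] + list(predictors))
    coefs, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    return coefs[1:]

b_x1, b_x2, b_x3 = ols([x1, x2, x3], y)  # multiple regression
total_x1 = ols([x1], y)[0]               # x1 alone: its total effect here

print(f"coefficient of x1 with x2 and x3 in the model: {b_x1:.3f}")
print(f"coefficient of x2 with x1 and x3 in the model: {b_x2:.3f}")
print(f"slope of x1 alone (total effect):              {total_x1:.3f}")
```

In this setup the coefficient of x1 is close to zero and that of x2 close to 0.2, so the usual reading would call x2 the stronger predictor. Yet the total effect of x1 built into the simulation is 0.7 * 0.8 = 0.56, nearly three times the effect of x2.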

Hence, the correct statement in 4) should have been:

4a. The portion of variable x1 that is not correlated with x2 and x3 has a large/weak/null effect on variable Y.

Another way to express exactly the same idea is:

4b. The direct effect of x1, ignoring its indirect effect through x2 and x3, is large/weak/null on variable Y.

If we go on to say that, at the very least, multiple regression is still helpful in comparing the direct effects of the predictors, this is no less clownish an idea. The real world is so complex that it is impossible to find any variable at all that has absolutely no indirect effect. Such a thing would be possible only if nothing in this world were correlated with, or the product of, anything else. Multiple regression has no meaning if the actual purpose of the researcher(s) is to compare the relative impact of the independent effects of the predictor variables.

Therefore, it must follow that any article making claim n°4 is flawed. Any article that attempts to compare the relative strength of the independent variables has already lost the battle even before the analysis has started.

In light of what we have said, we understand that multiple regression should be used to test whether any variable can be weakened in its direct effect by other independent variables but not to test between them as if they were in competition with each other.

How and why many practitioners don’t understand it at all is puzzling. To be honest, I have made mistakes of the same nature before, as can be seen in some of my earlier blog posts from almost one year ago, at a time when I was beginning to use regressions and my comprehension was not excellent, even though I used to believe the opposite. Indeed, I was thinking that claim n°4 was right. On the other hand, I consider this not to be troublesome for two reasons. I am not a statistician. It’s not my field of expertise. And worse, I am not a scientist at all. I don’t even want to call myself a researcher. Unlike me, the people who submit the publications and those who review them do not have any excuse at all.

The more I learn about stats, the more disappointed I am about most research papers. This is why, in situations like this, I wish I had stayed completely ignorant. Too late. My mental health has been seriously endangered now. But there is worse to my mind. My expectation is that even the statisticians are not necessarily aware of this problem. If they were, they could not possibly remain silent. No, they would speak out against the misuse of regression. And we wouldn’t see such fallacies today. And yet…

I think you’re paying too much attention to the imperfections of individual studies. Multiple regression or SEM with cross-sectional data can be fine if you’re using them in a confirmatory manner, as part of a research program. I would think that researchers generally see the results of a particular MR or SEM analysis as confirming their hypothesis not just because of that particular analysis but because the results fit together with previous theory and evidence. Of course, in many cases that underlying body of theory and evidence is as mushy as the individual MR or SEM analysis, but that’s another matter.

My blog post here was far from perfect (perhaps I will add a little something later). Indeed, I forgot to mention one very crucial point. When I think about them reading my article, I easily imagine them saying in their papers “we avoid the model equivalency problems mentioned by [me] by using variable X measured in 2010, M measured in 2011 and Y measured in 2012, because measures taken in 2012 cannot cause measures taken in 2010. Thus our findings are robust to equivalent models, and we manage to confirm our preferred causational pathway model as theoretically reasonable”. Well, at first glance, that sounds right. But no. That’s another illustration of their idiocy. I bet that if you measure X in 2012, M in 2010, and Y in 2011, you’ll have the same correlations, and yet a different assumption regarding your SEM modeling. Thus, the entire strategy fails badly. The only way to get your full effects properly is by way of SEM+longitudinal data. There is no other way. Causality must deal with changes (over time). A “static mediation”, like what happens when they use cross-sectional data, is nonsense. Theory does not transform correlation into causation. You cannot make a valid theory regarding mediation when using cross-sectional data.

I have already emailed a lot of researchers, and I’m still doing so right now. And they continue to ignore me. Worse, they don’t even click on the link I send them, because if they did, I would see some traffic in my personal blog’s stats, whereas the number of hits on my blog is actually not significantly different from zero. I already knew that all along, but now they confirm what I thought about them from the very beginning. Scientists are despicable and pathetic.

If things continue this way, you can trust me: I will write a new version of this article and submit it to a scientific journal. The way they decide to ignore me is not acceptable. I’ll not let it pass. Most papers turn out to be rubbish. For SEM, it’s even worse than for regressions. Remember my previous post on SEM? I already knew at that time about this whole problem of model equivalency (see Tomarken & Waller 2003). In fact, when I was beginning to use SEM, I had already detected this problem even before I came to read MacCallum and Tomarken/Waller. It took me several minutes. Yes, only a few minutes. It’s easy to see that when you reverse the causational paths, both model fit and parameters don’t change. I tried diverse data and changed the direction of the paths, but every time I tried, everything was the same. Nothing changed. Now, when you swap the dependent var. and the independent var., you will probably see that the path correlations change as well. Not surprising since you’ve changed the regressions. But what about model fit? Trust me, it will not change. Thus, when you read in most papers (I will not cite the authors) that processing speed causes divergent thinking which causes school achievement, you understand how stupid this procedure is, because if they reversed the paths they would obtain the same correlations. It’s worthless to try to get your full effect by multiplying the paths speed-thinking*thinking-school and adding the path speed-school, because the total effect of each predictor will differ depending on the model you choose. Another reason why this whole enterprise is nonsense is that they have not controlled for previous levels of speed or divergent thinking or school achievement. If they did, in most instances, you would certainly see that your paths are much lower. It’s because, like I said, causation deals with changes. Thus the previous levels of all of your variables must be held constant. There is no other way.

You never see studies like that. Now, I understand that most (99.99%) of what I have read is rubbish. The only place they deserve to go is -> trash. I would like to know how many millions they have wasted on worthless studies. I don’t know if I should continue to read what they do. My time is short and precious. Psychometrics, psychology, and to a lesser extent economics, it’s all the same. Problems with interpreting significance tests, problems with r², problems with interpreting regression coefficients, SEM, etc. That’s too much for me. As you know, I have learned all this alone. Since I’m not a scientist and, worse, I am not intelligent, it took me a lot of time to understand stats. It was hell and damnation for me. Thus my disappointment is even greater considering the amount of time I have wasted. For nothing.

I know it’s difficult for you to trust me, since I have no qualification in this field. The safest and best move is always to side with the majority. When a non-expert attempts to challenge an expert, in 99.999999% of cases, this guy is a silly clown. In only 0.000001% of cases the guy in question is a genius. I prefer not to think I’m a genius, because that’s not true, since I really know who I am in real life. But even if I understand the skepticism, I do not appreciate when it works like an argument from authority.

To be sure that any readers here will not be doubtful, it’s more straightforward to demonstrate the similarity in MR and SEM. Thus I have added a paragraph + 2 pictures. Now, everything is definitely settled. I have won. They have lost. It’s the end of this pathetic story.

I meant to comment on this earlier, but, generally speaking, even longitudinal measurements aren’t necessarily enough to prove causation. There could be some process upstream causing the two variables in question to change over time in tandem.

Case in point: looking at MZ twins and trying to correlate some life event with some outcome of interest. The idea is that MZ twins control for genetics, so this would be proof that the life event in question causes the outcome of interest.

Of course, this doesn’t actually follow. The problem with this approach: MZ twins aren’t actually genetically identical, and they are certainly not phenotypically identical. Even setting aside genetic differences, it’s hardly inconceivable that some (immutable) phenotypic difference between twins partly causes both the life event and the outcome of interest.

Most scientists surely know that. In general, the reason why you include an additional var. is that you have a theoretical reason to believe it may have some predictive power. Models must be theoretically based. Also, if they want to include more independent/mediator var., this will only make things worse. Stationarity is a crucial assumption to be met in causal analysis. Another is the equality of stability across all of the variables (indep., mediator, dep.). And the more variables and waves you have, the more difficult it will be to fulfill all these conditions. It is best to restrict your variables to the strict minimum.

Causality is so difficult to prove that I don’t like how some of them speak so lightly about a relationship that starts with X and leads to Z through a mediator Y.